App Details

Description

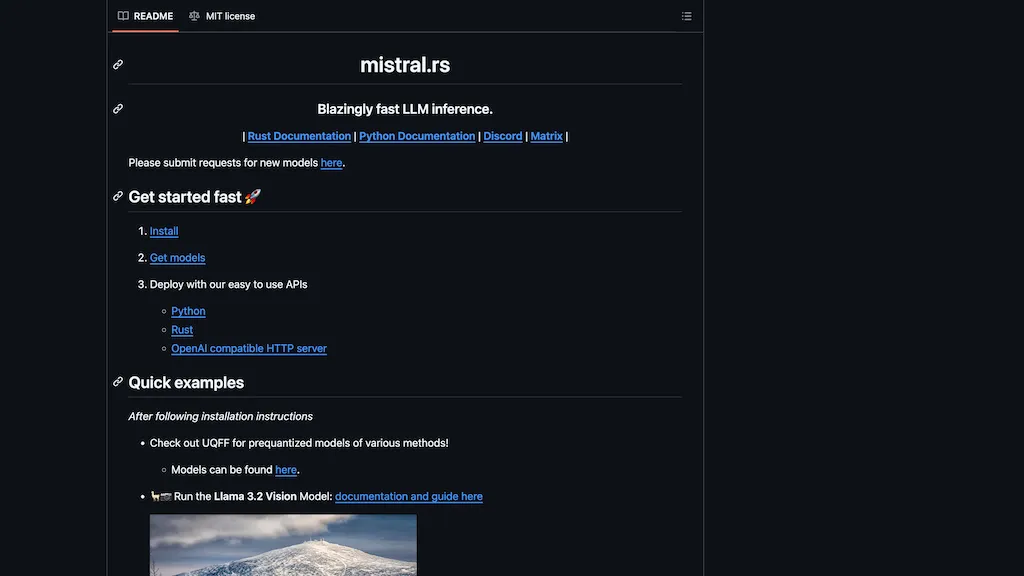

Mistral.rs is a fast, versatile inference engine for large language models (LLMs). It offers APIs for several languages, including Python and Rust, plus an OpenAI-compatible HTTP server, so existing OpenAI clients can talk to it with little or no change. Key features include in-place quantization (ISQ), which quantizes Hugging Face models as they are loaded instead of requiring a separate conversion step; device mapping that splits a model across CPU and GPUs for flexible resource allocation; and a broad range of quantization options from 2-bit to 8-bit. It runs text, vision, and diffusion models, and supports advanced capabilities such as LoRA adapters, paged attention, and continuous batching. With CUDA support for NVIDIA GPUs and Metal acceleration on Apple silicon, it can be deployed on diverse hardware, making it a good fit for developers who need scalable, high-throughput LLM inference.
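Because the server speaks the OpenAI chat-completions format, a client only needs to send a standard JSON request. The sketch below uses only the Python standard library. It assumes a mistral.rs server is already running locally on port 1234; the `BASE_URL`, the placeholder model name `"default"`, and the helper names `build_chat_request` and `chat` are illustrative assumptions, not part of mistral.rs itself.

```python
import json
import urllib.request

# Assumption: a mistral.rs server is listening locally on port 1234.
# Adjust BASE_URL to match how you actually started the server.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str, max_tokens: int = 256) -> dict:
    """Build a request body in the OpenAI chat-completions format (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def chat(prompt: str, model: str = "default") -> str:
    """POST to /chat/completions and return the first choice's text (hypothetical helper).

    "default" is a placeholder model name; use whatever name your server expects.
    """
    body = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        payload = json.load(resp)
    # OpenAI-style responses put the generated text under choices[0].message.content.
    return payload["choices"][0]["message"]["content"]
```

With a server running, `chat("Explain paged attention in one sentence.")` would return the model's reply as a string. The same request body works with the official `openai` Python client by pointing its base URL at the local server, which is the main benefit of an OpenAI-compatible API.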

