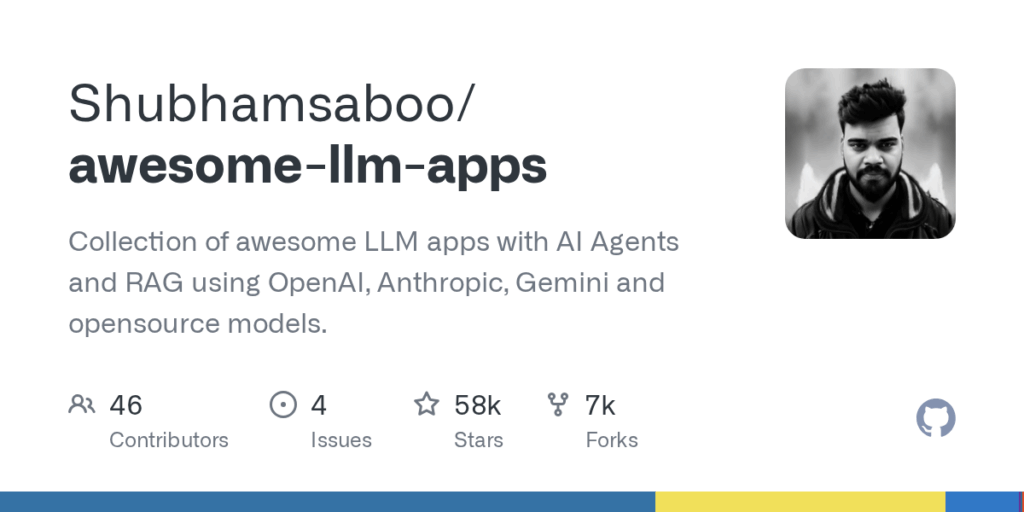

awesome llm apps

Basic Information

A curated, searchable collection of example LLM-powered applications, tutorials, and starter templates demonstrating agentic patterns such as retrieval-augmented generation (RAG), AI agents, multi-agent teams, Model Context Protocol (MCP), voice agents, memory-enabled apps, and fine-tuning workflows. The repository aggregates projects that use models from OpenAI, Anthropic, and Google (Gemini), as well as open-source models such as DeepSeek, Qwen, and Llama. It includes apps designed to run locally or in cloud environments. The collection is organized into focused sections: starter agents, advanced agents, autonomous game-playing agents, multi-agent teams, voice agents, MCP agents, RAG tutorials, memory tutorials, chat-with-X guides, and fine-tuning examples. Each listed project generally contains its own README, dependencies, and setup steps. The collection is intended as a practical resource for developers, researchers, and engineers seeking hands-on examples, reproducible demos, and learning materials for building and experimenting with LLM-powered applications.