anything-llm

Basic Information

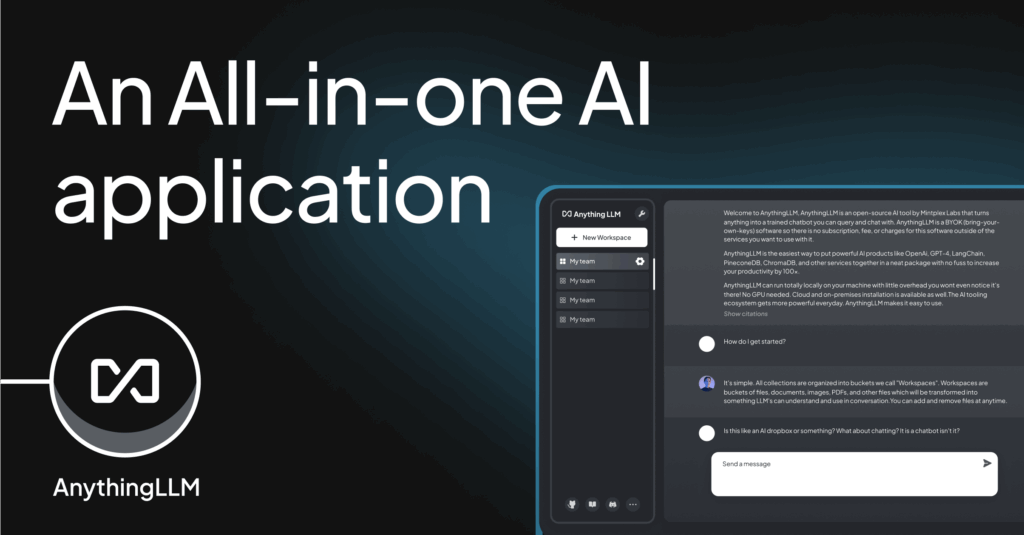

anything-llm is an all-in-one AI application that runs as a desktop app or in Docker containers. It provides an integrated suite for building, running, and experimenting with AI agents and retrieval-augmented generation (RAG) workflows. The project includes a no-code agent builder that lowers the barrier to composing agent behaviors and pipelines, and it advertises compatibility with the Model Context Protocol (MCP) for interoperability with other agent tools. The repository is positioned as a single package that combines hosting, agent creation, and RAG, so users can prototype, test, and deploy agent-driven applications locally or in containerized deployments.

Stars

47916

App Details

Features

The README and repository description highlight a desktop application alongside a Docker-ready deployment model. Built-in RAG is listed as a core capability, augmenting model outputs with information retrieved from external documents or knowledge sources. The project bundles AI agents together with a no-code agent builder for composing agent logic without programming. MCP compatibility is noted as a way to integrate with external agent ecosystems. Taken together, the feature set is presented as an all-in-one stack that combines hosting, agent authoring, and retrieval in a single project.

Use Cases

anything-llm simplifies creating and running AI agents by combining hosting and tooling into one package that can run locally on a desktop or inside Docker. The no-code agent builder helps non-developers prototype agent behaviors without writing code. Built-in RAG enables agents to use external documents or knowledge sources to improve responses. Docker support aids reproducible deployments and easy distribution. MCP compatibility is intended to facilitate connecting the application to other agent tools or workflows. Together these aspects reduce setup time and lower the barrier to experimenting with agent-driven applications.