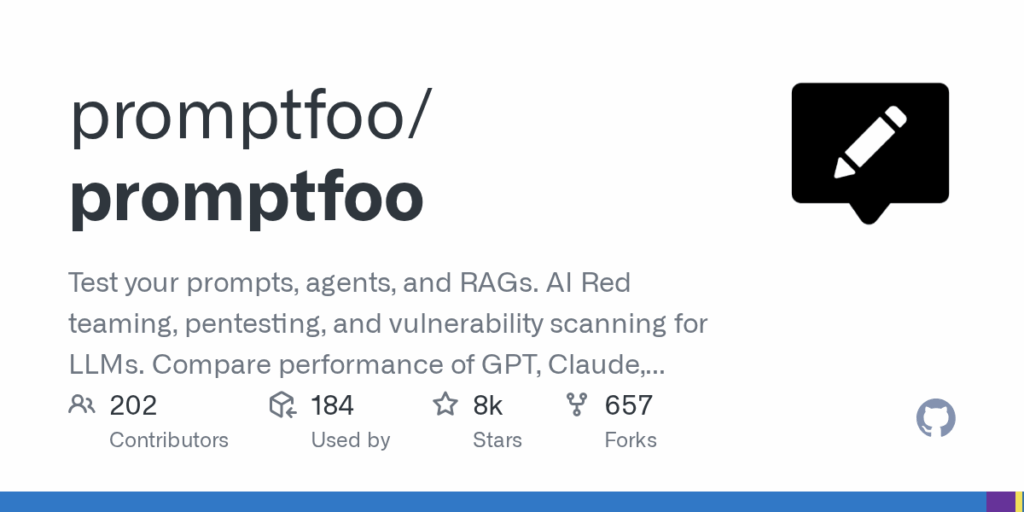

promptfoo

Basic Information

promptfoo is a developer-focused tool for testing, evaluating, and hardening LLM applications. It provides a local-first CLI and Node package for running automated prompt and model evaluations, comparing model outputs side by side across providers, and performing red teaming and vulnerability scanning that produces security reports. The project aims to reduce trial and error during LLM development and to help teams ship more secure, reliable AI apps. It integrates into CI/CD pipelines and runs entirely on the developer's machine, so prompts and data remain private. Documentation includes getting-started guides and red-team guidance. The README highlights support for multiple model providers and emphasizes a data-driven approach to choosing prompts and models.
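
To make the idea of a side-by-side evaluation concrete, here is a minimal TypeScript sketch of the general pattern: every prompt is rendered for every test case, sent to every provider, and checked against an expectation. This is not promptfoo's API. The `Provider`, `TestCase`, and `Row` types, the `render` and `evaluate` helpers, and the two stub providers are all hypothetical names invented for illustration, and the stubs are local functions standing in for real model backends.

```typescript
// Hypothetical types for illustration only; these are NOT promptfoo's API.
type Provider = { id: string; call: (prompt: string) => string };
type TestCase = { vars: Record<string, string>; expectContains: string };
type Row = { provider: string; prompt: string; output: string; pass: boolean };

// Fill {{name}} placeholders in a prompt template from the test variables.
function render(template: string, vars: Record<string, string>): string {
  return template.replace(/\{\{(\w+)\}\}/g, (_match: string, key: string) => vars[key] ?? "");
}

// Run every prompt against every test case and every provider,
// recording one result row per combination.
function evaluate(prompts: string[], providers: Provider[], tests: TestCase[]): Row[] {
  const rows: Row[] = [];
  for (const template of prompts) {
    for (const test of tests) {
      const prompt = render(template, test.vars);
      for (const provider of providers) {
        const output = provider.call(prompt);
        rows.push({
          provider: provider.id,
          prompt,
          output,
          pass: output.includes(test.expectContains),
        });
      }
    }
  }
  return rows;
}

// Stub providers (assumptions): plain local functions, no network calls.
const providers: Provider[] = [
  { id: "stub-upper", call: (p) => p.toUpperCase() },
  { id: "stub-echo", call: (p) => `echo: ${p}` },
];

const rows = evaluate(
  ["Say hello to {{name}}"],
  providers,
  [{ vars: { name: "Ada" }, expectContains: "Ada" }],
);

// Print the comparison as a table, one row per provider.
console.table(rows);
```

In this sketch the uppercasing stub fails the case-sensitive check while the echo stub passes, which is the kind of per-provider difference a comparison table is meant to surface. A real setup would replace the stubs with calls to actual model providers and would typically use richer assertions than a substring match.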