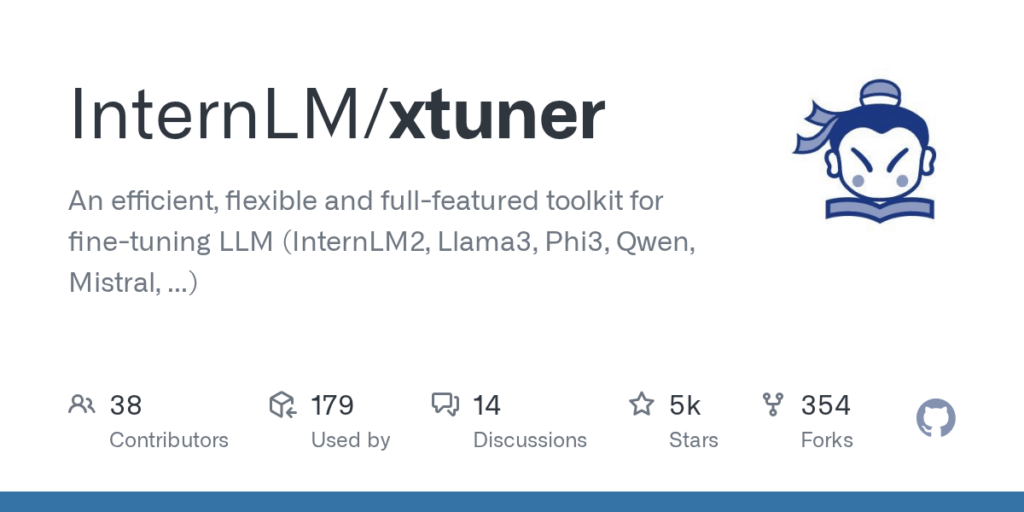

xtuner

Basic Information

XTuner is an open-source toolkit designed to fine-tune large language models (LLMs) and vision-language models (VLMs) efficiently and flexibly. It provides end-to-end support for pre-training, instruction fine-tuning, agent fine-tuning, chatting with models, model conversion, merging adapters, and deployment preparation. The project targets a wide range of model sizes and hardware setups: it can fine-tune 7B models on a single 8GB GPU and scale to multi-node training for models exceeding 70B. It integrates high-performance operators to increase training throughput and is compatible with DeepSpeed, whose ZeRO optimizations reduce memory usage. XTuner includes ready-to-use configurations and data pipelines that accommodate many dataset formats. The toolkit supports multiple training algorithms, such as QLoRA, LoRA, and full-parameter fine-tuning, and provides utilities to convert trained checkpoints into Hugging Face format for downstream deployment and evaluation.
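The 8GB figure and the gap between LoRA and full-parameter fine-tuning are easier to see with a rough calculation. The sketch below is an illustrative back-of-the-envelope estimate, not part of XTuner. The helper names `weight_memory_gb` and `lora_trainable_params` are hypothetical. The estimate counts weight storage only and ignores activations, gradients, optimizer states, quantization constants, and runtime overhead, so real usage is higher.

```python
# Back-of-the-envelope arithmetic (illustrative only, not XTuner code).
# Assumption: counts weight storage only; activations, gradients, optimizer
# states, quantization constants, and CUDA/framework overhead are ignored.


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters LoRA adds to one d x k weight matrix.

    LoRA freezes W and learns a low-rank update B @ A with
    B of shape (d, r) and A of shape (r, k), i.e. r * (d + k) parameters.
    """
    return r * (d + k)


if __name__ == "__main__":
    params_7b = 7e9  # nominal parameter count of a "7B" model
    for label, bits in [("fp32", 32), ("fp16/bf16", 16), ("int8", 8), ("4-bit", 4)]:
        print(f"{label:>10}: {weight_memory_gb(params_7b, bits):5.1f} GB")

    # Hypothetical 4096 x 4096 projection matrix with LoRA rank 8.
    full = 4096 * 4096
    lora = lora_trainable_params(4096, 4096, 8)
    print(f"LoRA trains {lora:,} of {full:,} parameters ({100 * lora / full:.2f}%)")
```

Under these assumptions, 16-bit weights for a 7B model alone take about 14 GB, more than an 8GB card holds, while 4-bit weights take about 3.5 GB. This is one plausible reading of why QLoRA, which quantizes the frozen base model to 4 bits and trains only small low-rank adapters, makes single-8GB-GPU fine-tuning feasible.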