cognita

Basic Information

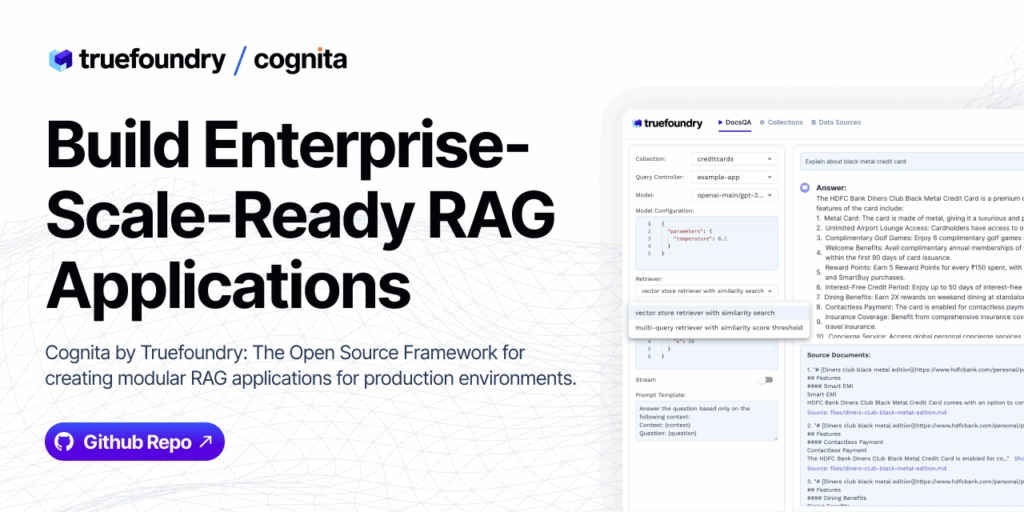

Cognita is an open-source framework for organizing, developing, and productionizing Retrieval-Augmented Generation (RAG) applications. Its modular codebase keeps parsers, data loaders, embedders, retrievers, indexing jobs, and the API server as separate components, so teams can move from notebook prototypes to deployable services.

Cognita ships with a FastAPI backend, a frontend UI for no-code document ingestion and querying, an LLM Gateway that proxies requests to configurable model providers, and support for vector databases such as Qdrant and SingleStore. It also provides a metadata store backed by Prisma/Postgres and supports incremental indexing. Local development runs via docker-compose, and production deployment is supported through Truefoundry.

The repository is intended for developers and teams building document search and question-answering (Q&A) systems who need a reusable, extensible RAG architecture, with an optional UI for non-technical users.
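To make the modular separation and incremental indexing concrete, here is a minimal, self-contained sketch in Python (the language of the FastAPI backend). Every class and method name below (`Parser`, `Embedder`, `InMemoryVectorStore`, `Indexer`, `Retriever`, `index`, `retrieve`) is a hypothetical illustration, not Cognita's actual API. The toy hashing embedder and in-memory store are assumptions standing in for a real embedding model and a vector database such as Qdrant. Incremental indexing is modeled here by re-embedding a document only when the SHA-256 hash of its content changes, which is one common approach and not necessarily the one Cognita uses.

```python
# Hypothetical sketch of a modular RAG pipeline. Class names and method
# signatures are illustrative assumptions, NOT Cognita's actual API.
import hashlib
import math
import re
from dataclasses import dataclass, field
from typing import Dict, List, Protocol, Tuple


@dataclass
class Document:
    doc_id: str
    text: str


@dataclass
class Chunk:
    doc_id: str
    text: str


class Parser(Protocol):
    def parse(self, doc: Document) -> List[Chunk]: ...


class Embedder(Protocol):
    def embed(self, text: str) -> List[float]: ...


class ParagraphParser:
    """Splits a document on blank lines (stand-in for a real file parser)."""
    def parse(self, doc: Document) -> List[Chunk]:
        return [Chunk(doc.doc_id, p.strip()) for p in doc.text.split("\n\n") if p.strip()]


class HashingEmbedder:
    """Toy bag-of-words embedder (stand-in for a real embedding model)."""
    def __init__(self, dim: int = 256) -> None:
        self.dim = dim

    def embed(self, text: str) -> List[float]:
        vec = [0.0] * self.dim
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            # md5 gives a stable bucket across runs, unlike the built-in hash().
            vec[int(hashlib.md5(word.encode()).hexdigest(), 16) % self.dim] += 1.0
        norm = math.sqrt(sum(v * v for v in vec)) or 1.0
        return [v / norm for v in vec]  # unit length, so dot product = cosine


@dataclass
class InMemoryVectorStore:
    """Stand-in for a vector database such as Qdrant or SingleStore."""
    rows: Dict[str, List[Tuple[Chunk, List[float]]]] = field(default_factory=dict)

    def upsert(self, doc_id: str, rows: List[Tuple[Chunk, List[float]]]) -> None:
        self.rows[doc_id] = rows  # replaces any stale chunks for this document

    def search(self, query_vec: List[float], k: int) -> List[Chunk]:
        scored = [
            (sum(a * b for a, b in zip(query_vec, vec)), chunk)
            for doc_rows in self.rows.values()
            for chunk, vec in doc_rows
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # highest cosine first
        return [chunk for _, chunk in scored[:k]]


class Indexer:
    """Incremental indexing job: re-embeds a document only if its content changed."""
    def __init__(self, parser: Parser, embedder: Embedder, store: InMemoryVectorStore) -> None:
        self.parser, self.embedder, self.store = parser, embedder, store
        self.seen: Dict[str, str] = {}  # doc_id -> SHA-256 of last indexed text

    def index(self, docs: List[Document]) -> int:
        updated = 0
        for doc in docs:
            digest = hashlib.sha256(doc.text.encode()).hexdigest()
            if self.seen.get(doc.doc_id) == digest:
                continue  # unchanged since the last run, so skip re-embedding
            rows = [(c, self.embedder.embed(c.text)) for c in self.parser.parse(doc)]
            self.store.upsert(doc.doc_id, rows)
            self.seen[doc.doc_id] = digest
            updated += 1
        return updated


class Retriever:
    def __init__(self, embedder: Embedder, store: InMemoryVectorStore, k: int = 2) -> None:
        self.embedder, self.store, self.k = embedder, store, k

    def retrieve(self, query: str) -> List[Chunk]:
        return self.store.search(self.embedder.embed(query), self.k)


if __name__ == "__main__":
    embedder, store = HashingEmbedder(), InMemoryVectorStore()
    indexer = Indexer(ParagraphParser(), embedder, store)
    docs = [Document("faq", "Qdrant stores vectors.\n\nPostgres stores metadata.")]
    print(indexer.index(docs))  # 1
    print(indexer.index(docs))  # 0 (content unchanged, nothing re-embedded)
    print(Retriever(embedder, store, k=1).retrieve("qdrant stores vectors")[0].text)
    # Qdrant stores vectors.
```

Because each stage depends only on a small interface, swapping the toy parser, embedder, or store for a real implementation leaves the indexing job and retriever untouched, which is the kind of separation the paragraph above describes.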