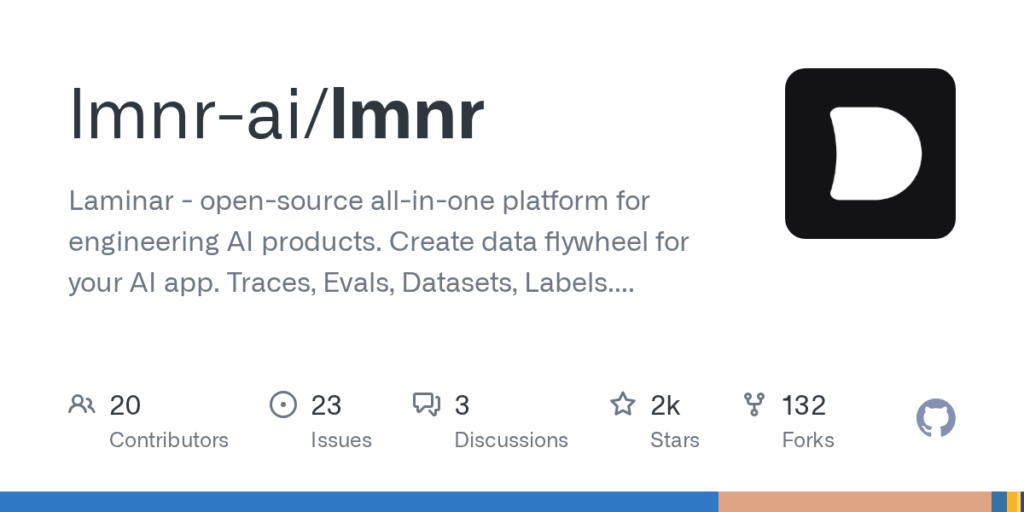

lmnr

Basic Information

Laminar (lmnr) is an open-source platform, aimed at engineering teams and developers, for tracing and evaluating AI applications. It provides instrumentation SDKs and integrations for common AI frameworks and model-provider SDKs that automatically capture call traces, inputs and outputs, latency, cost, and token counts. Client libraries are available for both TypeScript and Python, and the quickstart examples show how to initialize tracing and annotate functions with an observe wrapper (TypeScript) or decorator (Python).

Laminar can be self-hosted with Docker Compose, which runs a lightweight stack, or used through a managed platform. The backend is written in Rust for performance, traces are sent to it over gRPC, and the stack includes a message queue, databases, and a frontend for dashboards.
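The following self-contained Python sketch shows the kind of data an observe-style decorator records for each call: name, inputs, output, error, latency, and the parent span for nested calls. It is not Laminar's SDK. The names `observe`, `Span`, and `COLLECTED` are hypothetical. Spans are kept in an in-memory list instead of being exported over gRPC, and cost and token counts are omitted.

```python
import contextvars
import functools
import time
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class Span:
    """Hypothetical record of one traced call."""
    name: str
    inputs: dict
    parent: Optional[str] = None
    output: Any = None
    error: Optional[str] = None
    latency_s: float = 0.0


# Hypothetical in-memory sink; a real tracing SDK would export spans to a backend.
COLLECTED: list = []

# Name of the span currently executing, so nested calls can record their parent.
_current_span: contextvars.ContextVar = contextvars.ContextVar("current_span", default=None)


def observe(name: Optional[str] = None) -> Callable:
    """Hypothetical decorator: record inputs, output, error, and latency of each call."""
    def decorator(fn: Callable) -> Callable:
        span_name = name or fn.__name__

        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            span = Span(
                name=span_name,
                inputs={"args": args, "kwargs": kwargs},
                parent=_current_span.get(),
            )
            token = _current_span.set(span_name)  # children see this span as parent
            start = time.perf_counter()
            try:
                span.output = fn(*args, **kwargs)
                return span.output
            except Exception as exc:
                span.error = repr(exc)  # record the failure, then re-raise it
                raise
            finally:
                span.latency_s = time.perf_counter() - start
                _current_span.reset(token)
                COLLECTED.append(span)  # spans are appended in completion order

        return wrapper
    return decorator


# Usage: a toy two-step pipeline, where the retrieval step nests inside the answer step.
@observe()
def retrieve(query: str) -> list:
    return [f"doc about {query}"]


@observe(name="answer")
def answer(query: str) -> str:
    docs = retrieve(query)
    return f"{query}: {len(docs)} doc(s)"


answer("tracing")
for span in COLLECTED:
    print(span.name, span.parent, span.output, f"{span.latency_s:.6f}s")
```

Tracking the active span in a `contextvars.ContextVar` rather than a plain global keeps parent-child links correct when traced functions run concurrently in threads or asyncio tasks.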