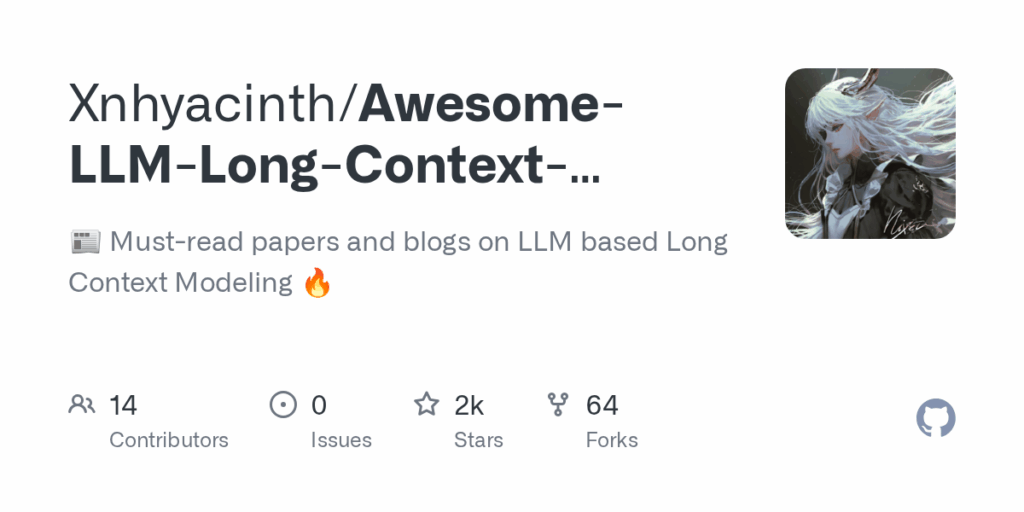

Awesome LLM Long Context Modeling

Basic Information

This repository is a curated, community-maintained collection of papers, blog posts, technical reports, and project links on long-context modeling for large language models (LLMs). It aggregates recent and earlier research across the subtopics that let LLMs handle extremely long inputs and outputs, including efficient attention patterns, KV-cache management and compression, length extrapolation, recurrent and state-space models, long-term memory, retrieval-augmented generation, context compression, long chain-of-thought methods, long-text and long-video/image modeling, agentic systems, speculative decoding, and benchmarks. The README is organized as a structured bibliography with topical sections, weekly and monthly paper updates, highlighted surveys and must-read items, and pointers to related repositories and evaluation suites. It serves as a central discovery hub and an ongoing index of scholarly and engineering work on extending and evaluating LLM context windows.