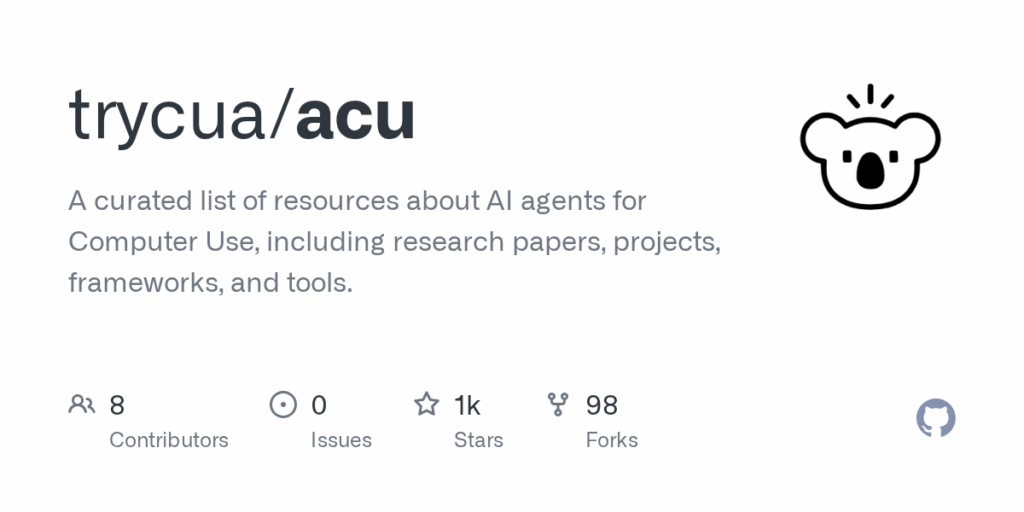

acu

Basic Information

This repository is a curated, living index of resources on AI agents for computer use. It collects articles, surveys and research papers, frameworks and models, UI grounding methods, datasets, benchmarks, safety analyses, open-source projects, commercial offerings, environment and sandbox tooling, and automation libraries. The README groups this material into topical sections: Articles; Papers (Surveys, Frameworks & Models, UI Grounding, Dataset, Benchmark, Safety); Projects (Open Source, Environment & Sandbox, Automation); and Commercial offerings. Where available, entries link to the associated code, paper, dataset, or website. The collection is meant to help researchers, developers, and practitioners find recent work and tooling in areas such as vision-language grounding, web and mobile agent design, trajectory-synthesis datasets, and agent evaluation benchmarks. The repository also includes contribution guidelines and highlights representative projects and benchmarks.