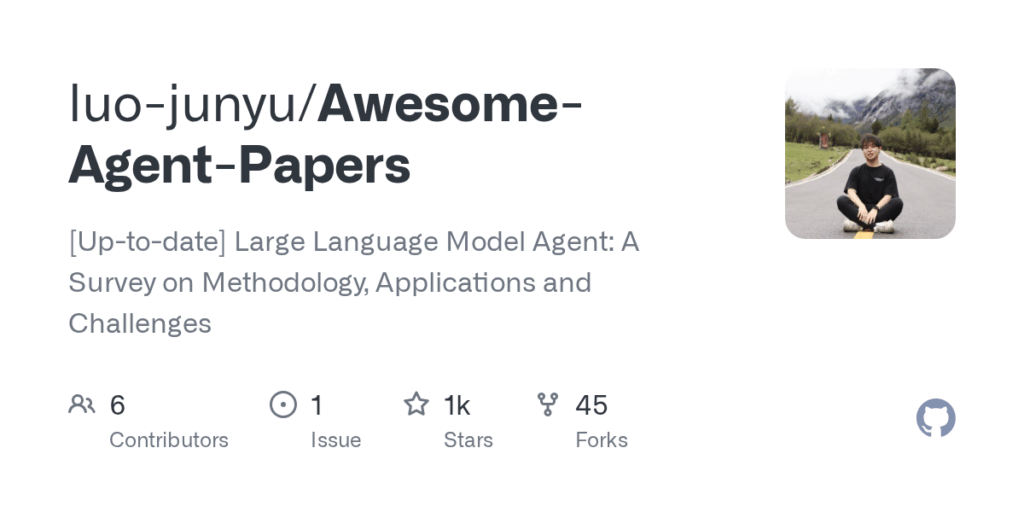

Awesome Agent Papers

Basic Information

This repository is a curated, regularly updated collection of research papers and resources on Large Language Model (LLM) agents. It combines a broad survey with annotated bibliographies covering core themes: agent construction, multi-agent collaboration, agent evolution, tools and tool use, security, benchmarks, datasets, ethics, and real-world applications. A taxonomy and an overview figure help readers orient themselves within the field. The repository also highlights an accompanying survey paper (arXiv:2503.21460).

It is intended as a central reference for researchers, graduate students, and practitioners looking for literature, benchmarks, and design patterns for LLM-based agents. The maintainers welcome contributions through pull requests or issues and provide citation information for the survey. A table of contents and topical sections make the collection easy to browse and navigate.