py-gpt

Basic Information

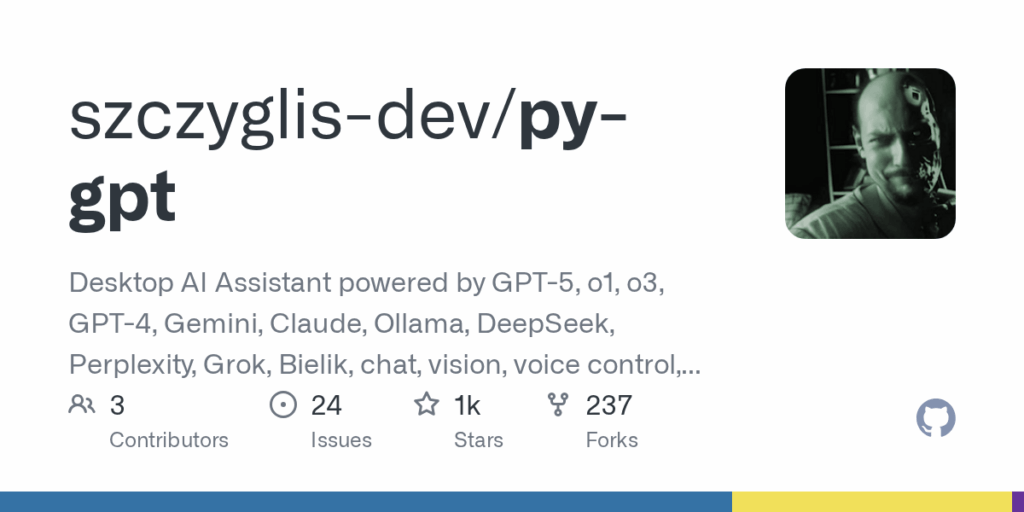

This repository provides py-gpt, a desktop AI assistant that brings multimodal AI interactions to the desktop by drawing on a range of large language models and services. The project description lists support for GPT-5, o1, o3, GPT-4, Gemini, Claude, Ollama, DeepSeek, Perplexity, Grok, and Bielik. The assistant is described as offering chat, vision, and voice capabilities, so its main purpose is to unify access to multiple model providers and modalities in a single desktop application. The README content available here does not include installation instructions, UI details, or concrete integration examples, so platform specifics and implementation details remain undocumented.