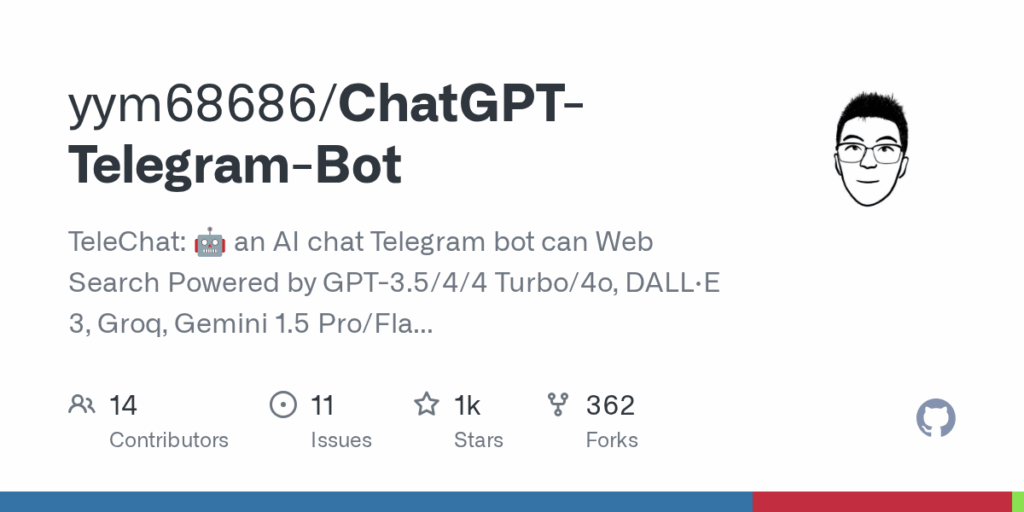

ChatGPT Telegram Bot

Basic Information

This repository provides TeleChat, a complete Telegram bot that connects Telegram chats to a range of large language models and related multimodal services. Users can talk to models such as GPT-3.5/4/4o/5, DALL·E 3, the Claude 2/3 series, Gemini 1.5, Groq Mixtral, LLaMA2-70b and other supported backends directly from Telegram. The project bundles a plugin architecture and a separate submodule that handles API requests and conversation management. It is configured extensively through environment variables and supports conversation isolation and multi-user modes. It also ships with deployment instructions for Docker, Replit, Koyeb, Zeabur and fly.io. The codebase is aimed at operators who want an easy-to-deploy, extensible Telegram front end for multiple LLM providers and multimodal capabilities, and it gives end users a conversational assistant inside Telegram.