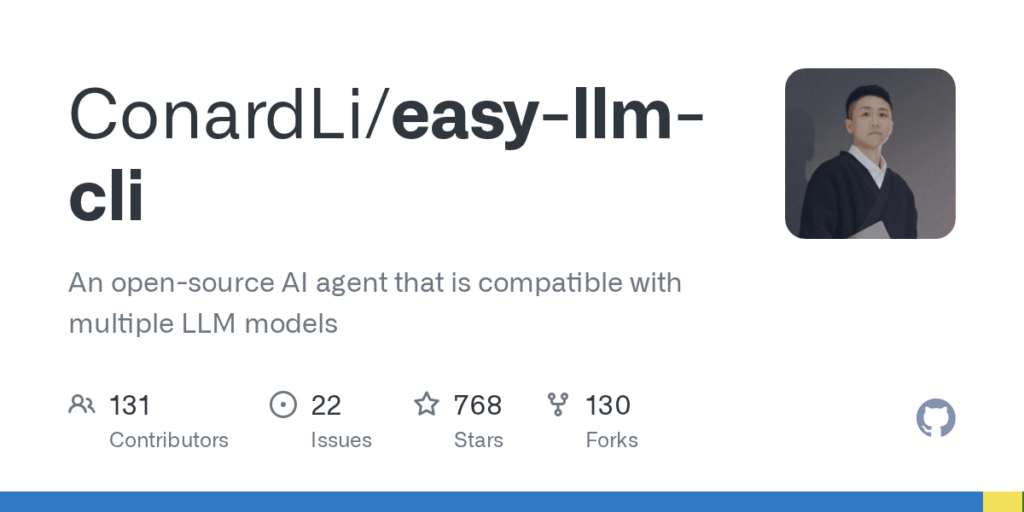

Easy LLM CLI

Basic Information

Easy LLM CLI is an open-source command-line AI agent and workflow tool that helps developers and technical users work with codebases and automate tasks using large language models. It connects to multiple LLM providers, including Google Gemini and OpenAI, and supports any OpenAI-compatible custom LLM through environment configuration. The project provides both an interactive CLI (invoked via npx easy-llm-cli, or as elc after a global install) and a programmatic API (ElcAgent) for embedding agent behavior in Node.js projects. It supports tool calling, multimodal inputs, large context windows, and MCP server extensions that connect external capabilities. The README documents quickstart steps that require Node.js v20 or later, examples for creating or analyzing projects, and configuration options for switching providers without changing workflows.
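
The Node.js v20 requirement mentioned above can be checked before launching the CLI. The following is a minimal TypeScript sketch of such a preflight check. The helper names (parseNodeMajor, meetsNodeRequirement, launchHint) are hypothetical and are not part of Easy LLM CLI. Only the version threshold and the npx easy-llm-cli command come from the description above.

```typescript
// Preflight sketch: verify the Node.js requirement before starting the CLI.
// All helper names here are illustrative assumptions, not Easy LLM CLI APIs.

// Minimum major version, taken from the quickstart requirement (v20 or later).
const MIN_NODE_MAJOR = 20;

/** Extracts the major version from a string such as "v20.11.1" or "20.11.1". */
function parseNodeMajor(version: string): number {
  const match = /^v?(\d+)\./.exec(version.trim());
  if (!match) {
    throw new Error(`Unrecognized Node.js version string: ${version}`);
  }
  return Number(match[1]);
}

/** Returns true when the given version satisfies the minimum major version. */
function meetsNodeRequirement(
  version: string,
  minMajor: number = MIN_NODE_MAJOR,
): boolean {
  return parseNodeMajor(version) >= minMajor;
}

/** Suggests the launch command, or an upgrade message if Node.js is too old. */
function launchHint(version: string): string {
  return meetsNodeRequirement(version)
    ? "npx easy-llm-cli"
    : `Upgrade Node.js to v${MIN_NODE_MAJOR} or later (found ${version})`;
}

// Example with a literal version string; in a real script, pass process.version.
console.log(launchHint("v20.11.1"));
```

In a Node.js script, passing process.version to launchHint would report whether the current runtime can start the CLI. The check compares only the major version, which is enough for a "v20 or later" rule.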