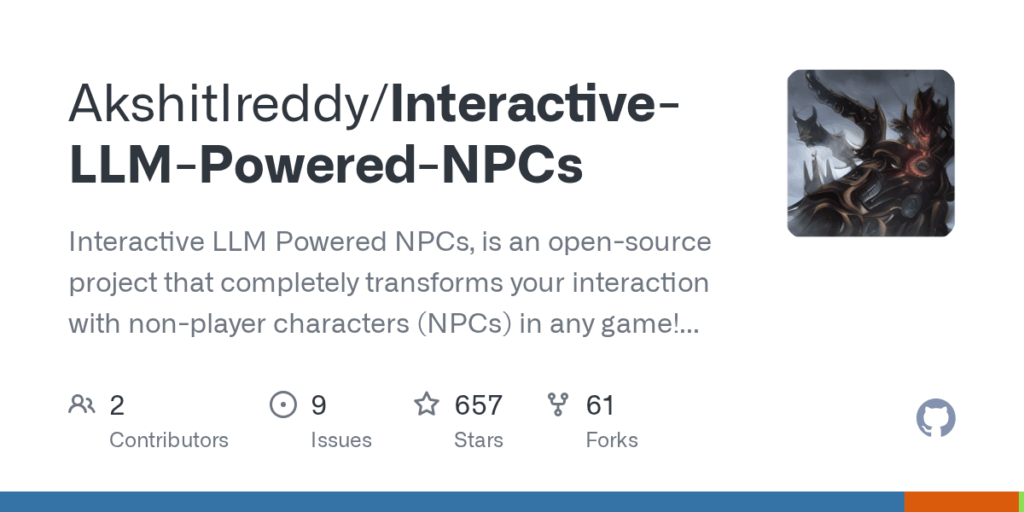

Interactive LLM Powered NPCs

Basic Information

Interactive LLM Powered NPCs is an open-source project that enables natural, voice-driven conversations with non-player characters (NPCs) in existing games without modifying the game's source code. It captures microphone input, transcribes the speech, identifies the target NPC with facial recognition, and generates a context-aware reply with a large language model that draws on public world data and character-specific vector databases. Text-to-speech voices the reply and SadTalker generates synchronized facial animation. The system then replaces or overlays the character's face pixels in the game so the character appears to speak.

The project includes templates, Jupyter notebooks, and a folder structure for adding new games and characters. Its listed prerequisites and installation steps include Python 3.10.6, FFmpeg, and Cohere API integration. It targets open-world titles such as Cyberpunk 2077, Assassin's Creed, and GTA 5, adding immersive dialogue to them.
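The conversational loop described above can be sketched as a chain of stages. This is a minimal sketch under stated assumptions, not the project's code. Speech-to-text, face recognition, the embedding model, and the LLM (for example a Cohere client) are all hypothetical callables passed in by the caller. The character-specific vector database is replaced by a small in-memory store ranked by cosine similarity, and `toy_embed` is a toy word-count embedding used only for illustration. Text-to-speech, SadTalker animation, and the face-pixel overlay are left out.

```python
import math
from dataclasses import dataclass, field
from typing import Callable

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class CharacterMemory:
    """Hypothetical in-memory stand-in for a character-specific vector database."""
    entries: list[tuple[Vector, str]] = field(default_factory=list)

    def add(self, embedding: Vector, text: str) -> None:
        self.entries.append((embedding, text))

    def top_k(self, query: Vector, k: int = 2) -> list[str]:
        # Return the k stored passages most similar to the query embedding.
        ranked = sorted(self.entries, key=lambda e: cosine(query, e[0]), reverse=True)
        return [text for _, text in ranked[:k]]


# Toy embedding (assumption): counts a few fixed words; a real setup would use an embedding model.
VOCAB = ["gun", "bar", "job", "car"]


def toy_embed(text: str) -> Vector:
    words = [w.strip("?.,!") for w in text.lower().split()]
    return [float(words.count(v)) for v in VOCAB]


def build_prompt(npc: str, world_facts: list[str], memories: list[str], player_text: str) -> str:
    """Combine public world data, retrieved character memories, and the player's line."""
    lines = [f"You are {npc}, a character in this game world.", "World facts:"]
    lines += [f"- {fact}" for fact in world_facts]
    lines += ["What you remember:"] + [f"- {m}" for m in memories]
    lines += [f"Player says: {player_text}", f"{npc} replies:"]
    return "\n".join(lines)


def run_turn(
    audio: bytes,
    frame: bytes,
    transcribe: Callable[[bytes], str],    # hypothetical speech-to-text stage
    identify_npc: Callable[[bytes], str],  # hypothetical face-recognition stage
    embed: Callable[[str], Vector],        # hypothetical embedding model
    generate: Callable[[str], str],        # hypothetical LLM call
    memories: dict[str, CharacterMemory],
    world_facts: list[str],
) -> tuple[str, str]:
    """One turn: speech -> text -> target NPC -> retrieved context -> LLM reply.

    The reply would then feed text-to-speech, SadTalker, and the face overlay,
    which this sketch omits.
    """
    player_text = transcribe(audio)
    npc = identify_npc(frame)
    context = memories[npc].top_k(embed(player_text))
    prompt = build_prompt(npc, world_facts, context, player_text)
    return npc, generate(prompt)


if __name__ == "__main__":
    memory = CharacterMemory()
    for text in ["The fixer has a job for us tonight", "My car is parked outside"]:
        memory.add(toy_embed(text), text)
    npc, reply = run_turn(
        b"", b"",
        transcribe=lambda audio: "Any job going?",
        identify_npc=lambda frame: "Vendor",
        embed=toy_embed,
        generate=lambda prompt: "Talk to the fixer.",
        memories={"Vendor": memory},
        world_facts=["The city is under curfew"],
    )
    print(npc, "->", reply)
```

Passing each stage in as a callable keeps the orchestration testable with stubs, as in the demo. A real setup would swap in actual speech, vision, embedding, and LLM components and a proper vector database.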