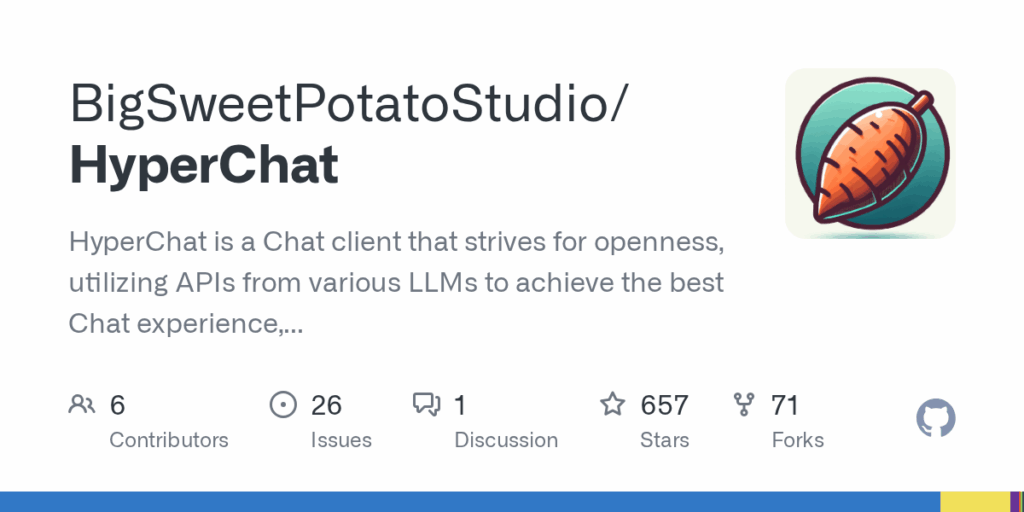

Features

The app runs on Windows, macOS and Linux as a desktop client and also offers an H5 web interface, with optional Docker images. It can be started quickly from the command line via npx, which uses a default port and password, or pulled as a Docker image for containerized use. It works with multiple LLM backends, and the project documents how usable each provider is, covering OpenAI, Claude, Qwen and others. It has full MCP support plus an MCP extension system for installing third-party MCP servers and calling their tools. HyperPrompt, its prompt syntax, supports variables and offers real-time preview and basic syntax checking. Users can define agents, set up scheduled task lists that send messages to agents, and invoke an agent in chat with @. Other features include WebDAV incremental sync, a RAG knowledge base, a multi-chat workspace (ChatSpace), model comparison within a chat, artifacts with rich rendering (SVG, HTML, Mermaid, KaTeX), management of resources, prompts and tools, and UI features such as dark mode.
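To make "prompt syntax with variables, real-time preview and basic syntax checking" concrete, here is a minimal sketch of how a template engine of that kind might work. It assumes a `{{name}}` placeholder syntax, which is a hypothetical stand-in and not the documented HyperPrompt grammar; `checkTemplate` and `renderTemplate` are illustrative names, not functions from the project.

```typescript
// ASSUMPTION: placeholders look like {{name}}. This is illustrative only,
// not the actual HyperPrompt grammar.
type Vars = Record<string, string>;

interface CheckResult {
  ok: boolean;
  errors: string[];
}

const hasVar = (vars: Vars, name: string): boolean =>
  Object.prototype.hasOwnProperty.call(vars, name);

// Basic syntax check: every "{{" must be closed by "}}", wrap a valid
// identifier, and refer to a variable that has been defined.
function checkTemplate(template: string, vars: Vars): CheckResult {
  const errors: string[] = [];
  let i = 0;
  while (i < template.length) {
    const open = template.indexOf("{{", i);
    if (open === -1) break;
    const close = template.indexOf("}}", open + 2);
    if (close === -1) {
      errors.push(`unclosed "{{" at offset ${open}`);
      break;
    }
    const name = template.slice(open + 2, close).trim();
    if (!/^[A-Za-z_][A-Za-z0-9_]*$/.test(name)) {
      errors.push(`invalid variable name "${name}" at offset ${open}`);
    } else if (!hasVar(vars, name)) {
      errors.push(`undefined variable "${name}"`);
    }
    i = close + 2;
  }
  return { ok: errors.length === 0, errors };
}

// Preview rendering: substitute known variables and leave unknown
// placeholders untouched so the preview shows what is still missing.
function renderTemplate(template: string, vars: Vars): string {
  return template.replace(
    /\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}/g,
    (match: string, name: string) => (hasVar(vars, name) ? vars[name] : match),
  );
}
```

A live preview would simply call `checkTemplate` and `renderTemplate` on every edit; for example, with `{ topic: "closures", lang: "TypeScript" }`, the template `Explain {{topic}} in {{ lang }}.` passes the check and renders as `Explain closures in TypeScript.`.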

Use Cases

For end users, the project provides a flexible chat client that supports multiple LLM backends, so teams or individuals can compare models and switch between them within a conversation. Agent features and scheduled tasks let users automate recurring actions and delegate work to predefined agents. RAG backed by an MCP knowledge base improves retrieval and gives answers more relevant context. HyperPrompt and prompt resources speed up prompt engineering with live previews, and WebDAV sync plus web access make conversations and resources available across devices and deployments.

Tool integrations and MCP tool prompts let the assistant invoke web and command-line tools, inspect files (including ASAR analysis), run web searches, use map MCPs and publish webpages. Together these combine browsing, scraping and automated workflows inside a conversational UI. The project also supplies developer setup instructions for Electron and web builds.
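Under the hood, calling an MCP tool from a chat client comes down to a JSON-RPC 2.0 request. The sketch below builds that message using the `tools/call` method and `{ name, arguments }` params defined by the MCP specification, then extracts the text parts of a tool result. It is not taken from this project's code; the helper names and the `web_search` tool used in the usage note are hypothetical.

```typescript
// Shape of a JSON-RPC 2.0 request as used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: Record<string, unknown>;
}

// Tool results carry a list of content items; text items have a "text" field.
interface ToolResult {
  content: Array<{ type: string; text?: string }>;
  isError?: boolean;
}

let nextId = 1;

// Build a "tools/call" request. The tool name and arguments must match a
// tool the server advertised (e.g. via "tools/list").
function makeToolCall(name: string, args: Record<string, unknown>): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id: nextId++, // each request needs a unique id to match its response
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Join the text parts of a tool result for display in the conversation,
// skipping non-text items such as images.
function resultText(result: ToolResult): string {
  return result.content
    .filter((c) => c.type === "text" && typeof c.text === "string")
    .map((c) => c.text as string)
    .join("\n");
}
```

For instance, `JSON.stringify(makeToolCall("web_search", { query: "MCP" }))` produces the message a client would send over the server's transport (such as stdio or HTTP); the server's reply then goes through `resultText` before being shown in the chat.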