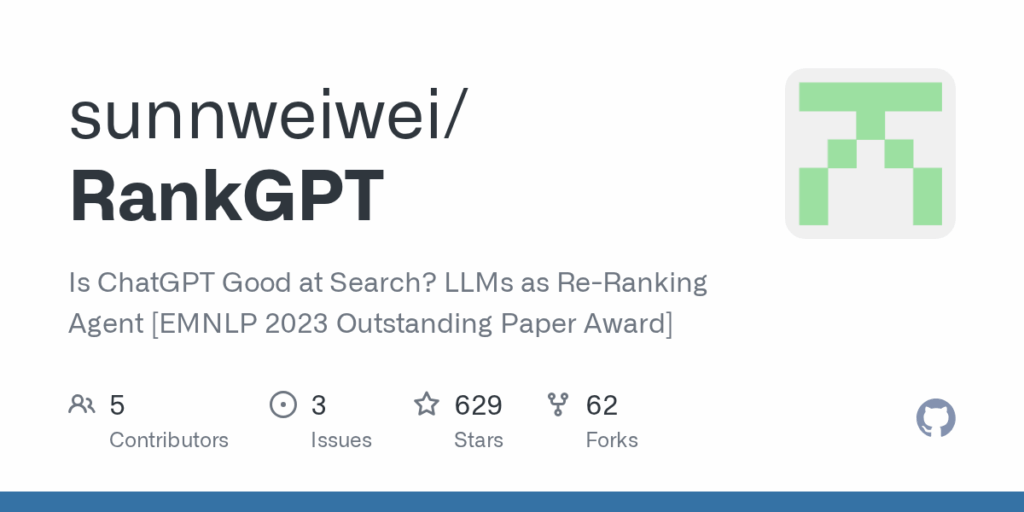

RankGPT

Basic Information

RankGPT is the research codebase accompanying the paper that investigates using large generative language models (LLMs) as re-ranking agents for information retrieval. It implements instructional permutation generation. In this approach, an LLM such as ChatGPT receives a query and a set of candidate passages and is asked to output the passages as a ranked permutation. That permutation is then applied to re-rank the candidates. Candidate lists can be longer than the model's token limit, so the code uses a sliding window strategy that re-ranks successive windows of the list, moving from back to front.

The repository includes example code for calling the permutation pipeline and scripts that run end-to-end retrieval and evaluation on standard IR benchmarks with pyserini and trec_eval. It also provides data releases with precomputed ChatGPT permutations for MS MARCO, procedures for distilling the LLM's ranking outputs into compact supervised rankers, and utilities for reproducing the experiments reported in the paper.
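The following is a minimal Python sketch of permutation parsing combined with sliding-window re-ranking. It is not the repository's code. The names `parse_permutation`, `sliding_window_rerank`, and `overlap_rank_fn` are hypothetical. The toy `overlap_rank_fn` stands in for an LLM call. The `[2] > [1] > [3]` response format and the default window size of 20 with a step of 10 are illustrative assumptions.

```python
import re
from typing import Callable, List

# A ranking function takes a query and a window of passages and returns a
# response string such as "[2] > [1] > [3]" (1-based passage identifiers).
# In RankGPT this role is played by an LLM; here it is a hypothetical stand-in.
RankFn = Callable[[str, List[str]], str]


def parse_permutation(response: str, n: int) -> List[int]:
    """Convert a response like "[2] > [3] > [1]" into 0-based indices.

    Out-of-range and duplicate identifiers are dropped, and passages the
    response omits are appended in their original order, so the result is
    always a full permutation of range(n).
    """
    order: List[int] = []
    for token in re.findall(r"\d+", response):
        idx = int(token) - 1
        if 0 <= idx < n and idx not in order:
            order.append(idx)
    order += [i for i in range(n) if i not in order]
    return order


def sliding_window_rerank(query: str, passages: List[str], rank_fn: RankFn,
                          window_size: int = 20, step: int = 10) -> List[str]:
    """Re-rank passages with a window that slides from the back to the front."""
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    ranked = list(passages)
    end = len(ranked)
    while end > 0:
        start = max(end - window_size, 0)
        window = ranked[start:end]
        order = parse_permutation(rank_fn(query, window), len(window))
        ranked[start:end] = [window[i] for i in order]
        if start == 0:
            break  # the window has reached the top of the list
        end -= step
    return ranked


def overlap_rank_fn(query: str, window: List[str]) -> str:
    """Toy ranker (not an LLM): order passages by query-term overlap."""
    terms = set(query.lower().split())
    scores = [len(terms & set(p.lower().split())) for p in window]
    order = sorted(range(len(window)), key=lambda i: -scores[i])  # stable on ties
    return " > ".join(f"[{i + 1}]" for i in order)


docs = [
    "cats sleep a lot",
    "how rankgpt reranks passages",
    "passages about dogs",
    "rankgpt uses llm permutations to rerank passages",
]
print(sliding_window_rerank("rankgpt rerank passages", docs, overlap_rank_fn,
                            window_size=3, step=1))
```

Each window is re-ranked only once in this sketch. A single back-to-front pass therefore carries strongly relevant passages toward the top, but it does not guarantee a full sort of the lower positions. In the example, "cats sleep a lot" ends up above "passages about dogs" because the two are never compared in the same window.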