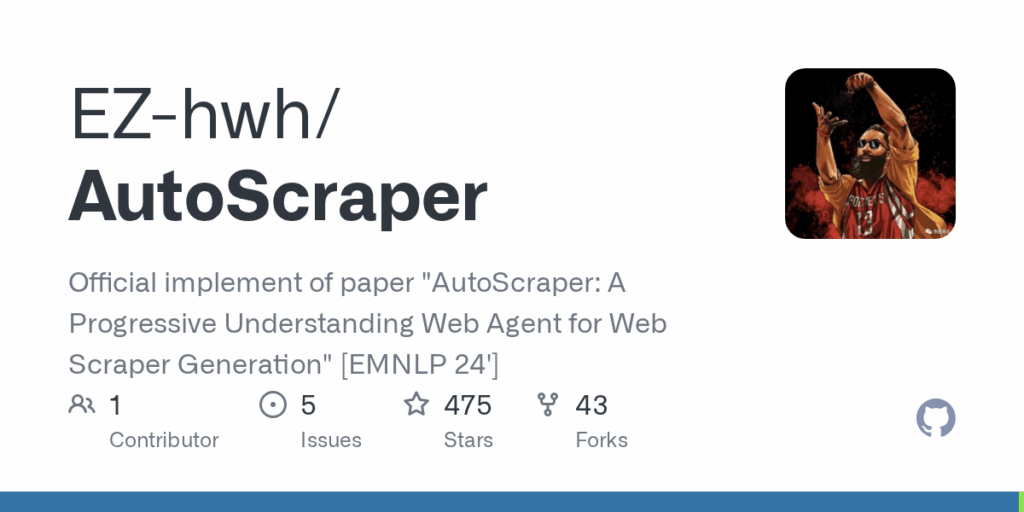

AutoScraper

Basic Information

This repository is the official code release for the paper 'AutoScraper: A Progressive Understanding Web Agent for Web Scraper Generation'. It provides experimental code that automatically generates web scrapers using a progressive understanding agent built on large language models. The repository is intended to let researchers reproduce the paper's results by running the generation, extraction, and evaluation pipelines against the SWDE dataset. It provides example entry points and documented CLI commands for generating scrapers, running extraction with the generated crawlers, and evaluating extraction quality. The authors note that this is experimental work, with open TODOs to adapt the system to real-world websites and to build a public demo for testing.

Links

Stars

475

GitHub Repository

App Details

Features

The repository includes scripts and utilities to generate and run model-driven scrapers, notably crawler_generation.py and crawler_extraction.py. Its examples reference configurable patterns such as "reflexion" and "autocrawler", use the SWDE benchmark for experiments, and demonstrate runs with the models ChatGPT and GPT4. The project provides an evaluation script at run_swde/evaluate.py, a requirements.txt file for dependencies, and setup instructions that include an optional Conda environment and a recommendation to use Python 3.9. The README shows example CLI flags such as --seed_website, --save_name, and --overwrite, and highlights assets and figures used in the paper. The codebase is described as experimental and intended for research reproduction.
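As a rough illustration of how the flags named above might fit together, the following sketch only assembles an argument list for crawler_generation.py and prints it; it does not run the script. The flag values (`3`, `demo_run`, `False`) are hypothetical, and the assumption that --overwrite takes an explicit value has not been checked against the README. How the pattern and model are selected is not shown in this description, so those options are left out rather than guessed.

```python
import shlex
import sys


def build_generation_command(seed_website, save_name, overwrite):
    """Assemble (but do not execute) an argument list for crawler_generation.py.

    Only flags named in the description are used; the value formats are
    assumptions made for this sketch.
    """
    return [
        sys.executable,
        "crawler_generation.py",
        "--seed_website", str(seed_website),  # hypothetical value format
        "--save_name", save_name,             # hypothetical run label
        "--overwrite", str(overwrite),        # assumed to take a value
    ]


cmd = build_generation_command(seed_website=3, save_name="demo_run", overwrite=False)

# Print the command without the interpreter path so it can be inspected
# before being passed to something like subprocess.run.
print(shlex.join(cmd[1:]))
```

Building the command as a list rather than a single string keeps each argument separate, which avoids quoting problems if a save name ever contains spaces.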

Use Cases

The repository helps researchers and developers reproduce the paper's experiments and serves as a starting point for building or evaluating automatic scraper generation approaches. It provides ready-to-run examples that synthesize scrapers, extract structured information, and quantitatively evaluate results on SWDE, which supports comparison and benchmarking. The included scripts and configuration examples illustrate how to wire LLMs into a scraper-generation pipeline and how to vary patterns and model choices. Because the code is experimental, it is better suited to prototyping, extending to real-world sites, and adapting or benchmarking new model-driven scraping techniques than to use as a production-ready scraper.
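To make "quantitatively evaluate" concrete, here is a minimal sketch of one common way to score extracted values against ground truth using precision, recall, and F1. This is an illustration under stated assumptions, not the logic of run_swde/evaluate.py: the function name, the exact-match set comparison, and the sample values are all hypothetical.

```python
def precision_recall_f1(predicted, expected):
    """Score extracted values against ground truth by exact match.

    Illustrative only: the repository's own evaluation script may
    normalize text or match values differently.
    """
    pred, gold = set(predicted), set(expected)
    true_positives = len(pred & gold)
    precision = true_positives / len(pred) if pred else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical extraction result for one page versus its labels.
precision, recall, f1 = precision_recall_f1(
    predicted=["Toyota Camry", "2010"],
    expected=["Toyota Camry", "2011", "sedan"],
)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Reporting precision and recall separately, alongside F1, shows whether a generated scraper tends to over-extract or to miss values.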