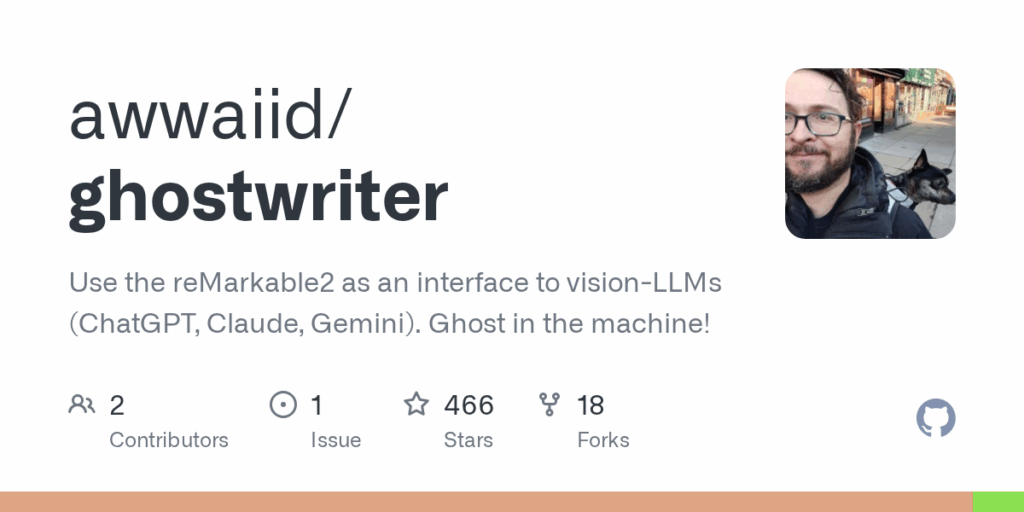

ghostwriter

Basic Information

Ghostwriter is an experimental reMarkable tablet application that watches what you write on the screen and, when triggered by a gesture or by on-screen content, has a vision-capable LLM write or draw back onto the device. It combines screenshot capture, optional image segmentation, and tool-based outputs, so the assistant can respond either with text typed through a virtual keyboard or with SVG drawn onto the page. The project is built as a standalone binary that you deploy to a reMarkable 2 or reMarkable Paper Pro, and it supports multiple LLM backends and engines. It is intended as a practical exploration of handwriting-plus-screen interaction, and of tooling for combining vision models with device input and output, rather than as a polished consumer app.
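The assist cycle described above can be pictured as a small pipeline: capture a screenshot, optionally segment it, ask a vision model, then route the model's tool call to an output. The following is a minimal Rust sketch under stated assumptions, not ghostwriter's actual code. The `VisionBackend` trait, the `ToolCall` and `Output` enums, `assist_cycle`, and `FakeBackend` are all hypothetical names, and modeling segmentation as an optional function that appends a textual region hint to the prompt is an assumption made for illustration.

```rust
/// What the model asked the device to do. Hypothetical names that mirror
/// the draw_text / draw_svg tools mentioned in the description.
#[derive(Debug, PartialEq)]
enum ToolCall {
    DrawText(String),
    DrawSvg(String),
}

/// Any vision-capable backend (hosted or local). Illustrative only;
/// this is not ghostwriter's real interface.
trait VisionBackend {
    fn respond(&self, screenshot_png: &[u8], prompt: &str) -> ToolCall;
}

/// Where a response ends up on the tablet.
#[derive(Debug, PartialEq)]
enum Output {
    Typed(String), // sent through the virtual keyboard
    Drawn(String), // SVG rendered onto the page
}

/// One assist cycle: screenshot -> optional segmentation -> model -> output.
fn assist_cycle<B: VisionBackend>(
    backend: &B,
    screenshot_png: &[u8],
    segment: Option<fn(&[u8]) -> String>,
    base_prompt: &str,
) -> Output {
    // Assumption: segmentation results are passed to the model as extra
    // text describing regions of the page, to help with placement.
    let prompt = match segment {
        Some(describe) => format!("{base_prompt}\n\nRegions: {}", describe(screenshot_png)),
        None => base_prompt.to_string(),
    };
    // Route the model's chosen tool to the matching output path.
    match backend.respond(screenshot_png, &prompt) {
        ToolCall::DrawText(text) => Output::Typed(text),
        ToolCall::DrawSvg(svg) => Output::Drawn(svg),
    }
}

/// Stand-in backend for experimentation: draws if the prompt mentions
/// drawing, otherwise answers in text.
struct FakeBackend;

impl VisionBackend for FakeBackend {
    fn respond(&self, _screenshot_png: &[u8], prompt: &str) -> ToolCall {
        if prompt.contains("draw") {
            ToolCall::DrawSvg("<svg/>".to_string())
        } else {
            ToolCall::DrawText(format!("answer to: {prompt}"))
        }
    }
}
```

Keeping the backend behind a trait is one way to let the same cycle run against different providers: switching from a hosted model to a local one then only changes which `VisionBackend` value is passed in.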

Links

Stars: 466

GitHub Repository

App Details

Features

A touch gesture triggers an assist cycle, with visual feedback drawn on the page. Multiple LLM backends are supported, including OpenAI (ChatGPT), Anthropic (Claude), Google Gemini, Groq, and local vision LLMs served through Ollama. A tool system offers draw_text and draw_svg modes, so responses can be typed through the virtual keyboard layer or rendered as vector drawings. Optional image segmentation preprocessing improves the spatial placement of output. The CLI has flags for model and engine selection, running in the background, saving screenshots and model output, and evaluation helpers. The repository also includes build and deploy instructions for cross-compiling to ARM targets, handling for the uinput kernel module used by the simulated keyboard, and a prompt/tool library for customizing behavior.
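Typing a draw_text response through a simulated keyboard means translating each character into key presses. The sketch below covers only that translation step. It assumes a US QWERTY layout and the standard Linux key codes from `linux/input-event-codes.h`, it does not open `/dev/uinput`, and `key_for` and `key_events` are hypothetical helpers rather than ghostwriter's own functions. Characters other than letters, digits, space, and newline are skipped.

```rust
// Linux input key codes (linux/input-event-codes.h), US QWERTY assumed.
const KEY_ENTER: u16 = 28;
const KEY_LEFTSHIFT: u16 = 42;
const KEY_SPACE: u16 = 57;

/// Map one character to (keycode, needs_shift), or None if unsupported.
fn key_for(c: char) -> Option<(u16, bool)> {
    // Each letter row has consecutive codes starting at KEY_Q, KEY_A, KEY_Z.
    const ROWS: [(&str, u16); 3] = [("qwertyuiop", 16), ("asdfghjkl", 30), ("zxcvbnm", 44)];
    let lower = c.to_ascii_lowercase();
    for (row, first) in ROWS {
        if let Some(i) = row.find(lower) {
            return Some((first + i as u16, c.is_ascii_uppercase()));
        }
    }
    match c {
        // KEY_1 = 2 through KEY_9 = 10, then KEY_0 = 11.
        '1'..='9' => Some((1 + c.to_digit(10)? as u16, false)),
        '0' => Some((11, false)),
        ' ' => Some((KEY_SPACE, false)),
        '\n' => Some((KEY_ENTER, false)),
        _ => None,
    }
}

/// Expand text into (keycode, pressed) events, wrapping capitals in Shift.
fn key_events(text: &str) -> Vec<(u16, bool)> {
    let mut events = Vec::new();
    for c in text.chars() {
        if let Some((code, shift)) = key_for(c) {
            if shift {
                events.push((KEY_LEFTSHIFT, true));
            }
            events.push((code, true)); // key down
            events.push((code, false)); // key up
            if shift {
                events.push((KEY_LEFTSHIFT, false));
            }
        }
    }
    events
}
```

A real uinput device would then write each `(code, pressed)` pair as an `EV_KEY` event followed by a `SYN_REPORT`; that device setup is omitted here.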

Use Cases

Ghostwriter lets reMarkable users and developers augment pen-and-paper workflows with LLM-driven responses directly on the device. It can take a handwritten question or sketch, run it through a vision-capable model, and return either typed text (via a virtual keyboard) or drawn output, which is useful for tasks like answering math questions, generating diagrams or sketches, extracting to-dos, and prototyping interactive on-device assistants. The repository includes evaluation tooling to record inputs and outputs, experiment with segmentation and model choices, and iterate on prompts and tools, making it practical for researchers and tinkerers experimenting with vision-LLM interactions on e-ink hardware.