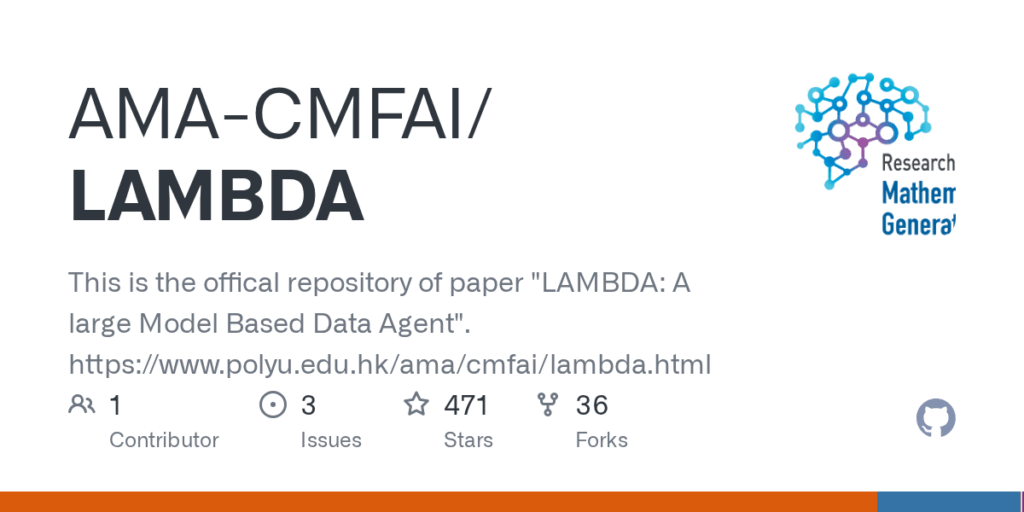

LAMBDA

Basic Information

LAMBDA is an open-source, code-free multi-agent data analysis system that uses large language models to carry out complex data analysis from natural language instructions. By orchestrating specialized data agents, it lets users and researchers run iterative, generative analysis workflows without writing code. Two complementary agent roles do the coding work: the programmer generates analysis code and the inspector debugs it. The system is built around human-in-the-loop operation, so users can intervene directly through its user interface.

The repository provides installation and configuration guidance. This covers Conda environment setup, dependency installation, an IPython kernel that serves as the local code interpreter, and a config.yaml file for setting API keys, models, and runtime options. LAMBDA can export analyses as reproducible Jupyter Notebooks and can use external models or local LLM backends. It also ships with demo case studies and documentation, and is released under the MIT License.
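The programmer/inspector interplay can be pictured as a generate, execute, and debug loop. The sketch below is a minimal illustration under stated assumptions, not LAMBDA's actual implementation. `programmer_inspector_loop`, `toy_programmer`, and `toy_inspector` are hypothetical names, the two agent functions are stubs standing in for LLM calls, and Python's built-in `exec` substitutes for the IPython kernel interpreter.

```python
import traceback
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Attempt:
    code: str
    error: Optional[str]  # traceback text, or None if the code ran cleanly


def run_code(code: str, namespace: dict) -> Optional[str]:
    """Execute code in a shared namespace (a stand-in for a kernel session).

    Returns the formatted traceback on failure, or None on success.
    """
    try:
        exec(code, namespace)
        return None
    except Exception:
        return traceback.format_exc()


def programmer_inspector_loop(
    task: str,
    programmer: Callable[[str, Optional[str]], str],  # hypothetical LLM "programmer"
    inspector: Callable[[str, str], str],             # hypothetical LLM "inspector"
    namespace: dict,
    max_rounds: int = 3,
) -> tuple[dict, list[Attempt]]:
    history: list[Attempt] = []
    code = programmer(task, None)  # first draft, no advice yet
    for _ in range(max_rounds):
        error = run_code(code, namespace)
        history.append(Attempt(code=code, error=error))
        if error is None:
            break
        # The inspector diagnoses the failure; the programmer revises using that advice.
        advice = inspector(code, error)
        code = programmer(task, advice)
    return namespace, history


# Stub agents for illustration only; a real system would prompt an LLM here.
def toy_programmer(task: str, advice: Optional[str]) -> str:
    if advice is None:
        return "result = sum(values) / len(value)"  # deliberate typo -> NameError
    return "result = sum(values) / len(values)"


def toy_inspector(code: str, error: str) -> str:
    last_line = error.strip().splitlines()[-1]  # e.g. "NameError: name 'value' ..."
    return f"Fix this error: {last_line}"


ns, history = programmer_inspector_loop(
    "mean of values", toy_programmer, toy_inspector, {"values": [2, 4, 6]}
)
print(ns["result"])  # 4.0
print(len(history))  # 2
```

Keeping a single namespace across attempts mirrors how a notebook kernel preserves state between cells. The loop also caps the number of repair rounds, so a task that keeps failing returns its full attempt history to the user instead of retrying indefinitely, which is where human intervention would come in.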