inferable

Basic Information

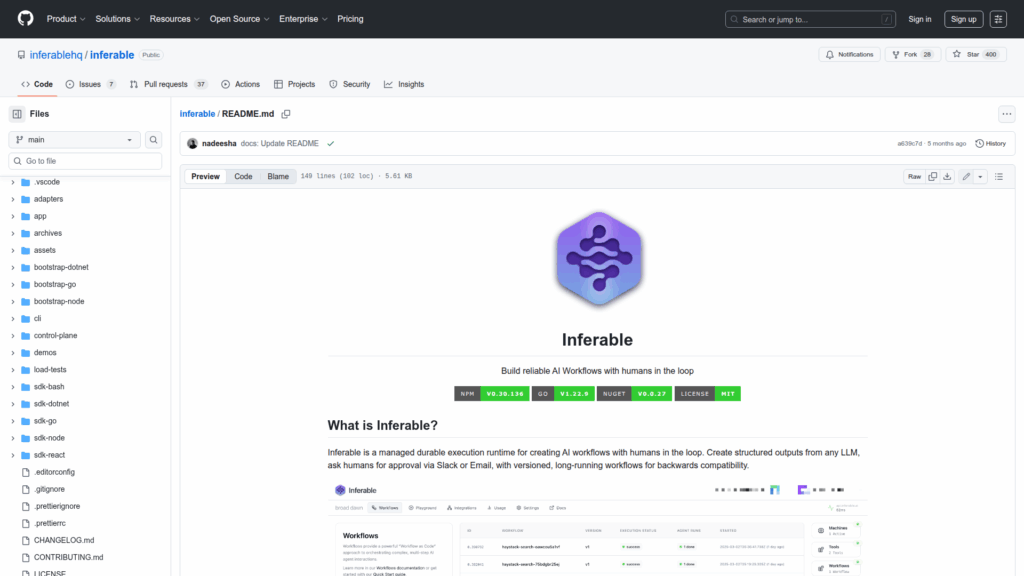

Inferable is an open-source, managed durable execution runtime and control plane for building AI-driven workflows and human-in-the-loop processes. Developers define versioned workflows that run in their own infrastructure, which enables long-running, stateful executions with version affinity. The repository contains the core control-plane services, a web management console and playground, a command-line tool, and SDKs for Node.js, Go, and .NET (experimental) for integrating workflows into applications. Typical uses include producing structured outputs from large language models, requesting human approvals via email or Slack, caching expensive side effects, and observing workflow timelines. The project emphasizes self-hosting, security, and backward compatibility so that teams retain control of their data and models while deploying AI workflows across private networks.