Basic Information

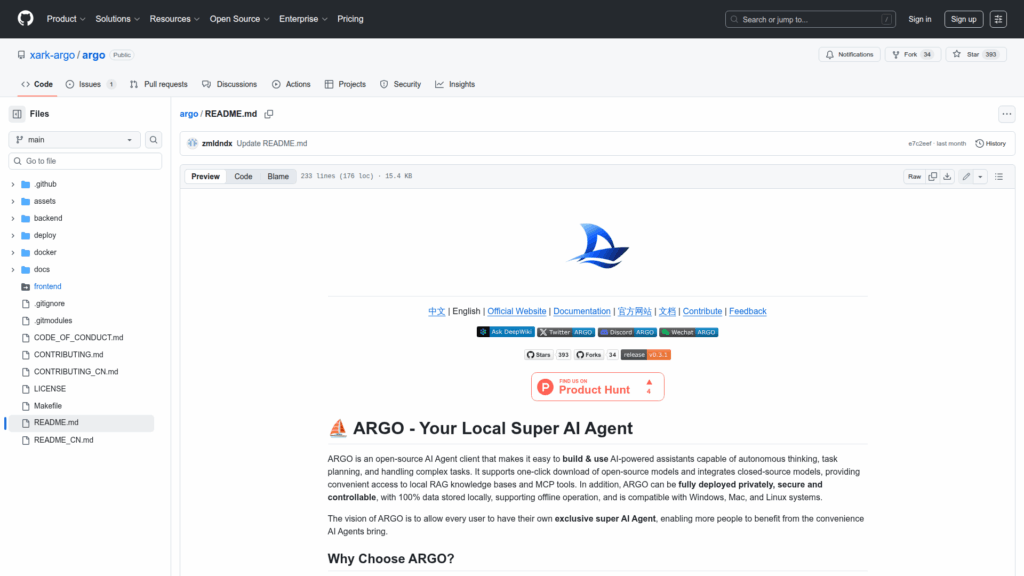

ARGO is an open-source AI agent client for building and using AI assistants that can reason autonomously, plan tasks, and carry them out in multiple stages. It takes a local-first approach: agents can run privately and offline, with all data stored on the user's machine. ARGO works with both open-source and closed-source models, offers one-click model downloads and Ollama integration, and supports any API that follows the OpenAI format.

Its main features are a Multi-Agent task engine, a local RAG (retrieval-augmented generation) knowledge base, MCP (Model Context Protocol) tooling, and a visual Agent Factory for building assistants tailored to specific scenarios. ARGO targets desktop and server environments, with native clients for Windows, macOS, and Linux, and Docker deployment options for both CPU and GPU environments.