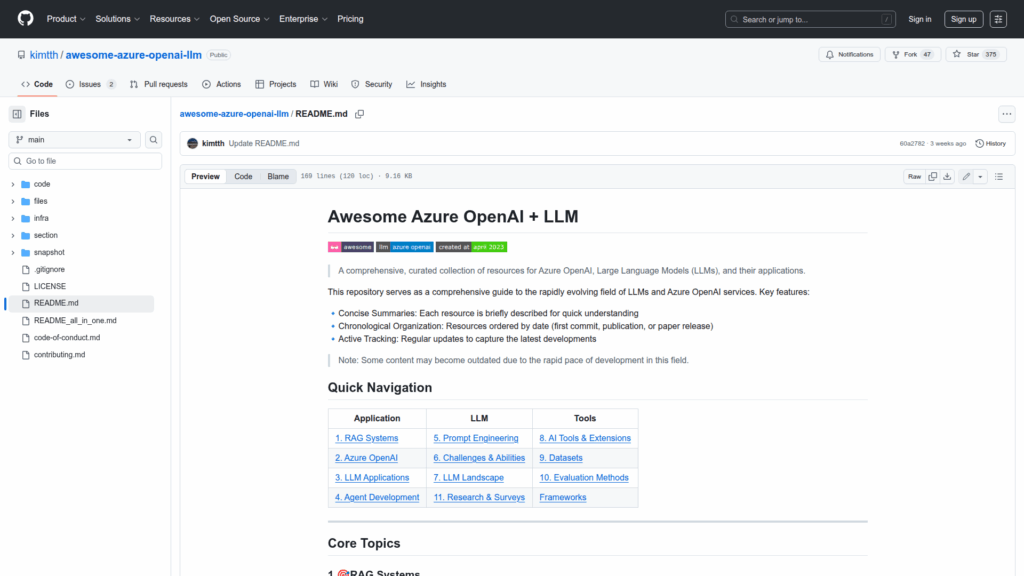

awesome-azure-openai-llm

Basic Information

This repository is a curated collection and reference guide for Azure OpenAI, large language models (LLMs), and related applications. It aggregates resources on Retrieval-Augmented Generation (RAG), the Azure OpenAI platform, LLM application development, autonomous agent design, prompt engineering and fine-tuning, model limitations and safety, and the broader LLM landscape. The README organizes content chronologically and gives each resource a concise summary so readers can quickly judge its relevance. It is intended to help practitioners, researchers, and developers find authoritative guides, architectures, tooling comparisons, and research surveys for building, evaluating, and deploying LLM-based systems on Azure and with popular open-source frameworks. The repository is actively maintained, but because the field evolves rapidly, its maintainers note that some entries may become outdated over time.