Basic Information

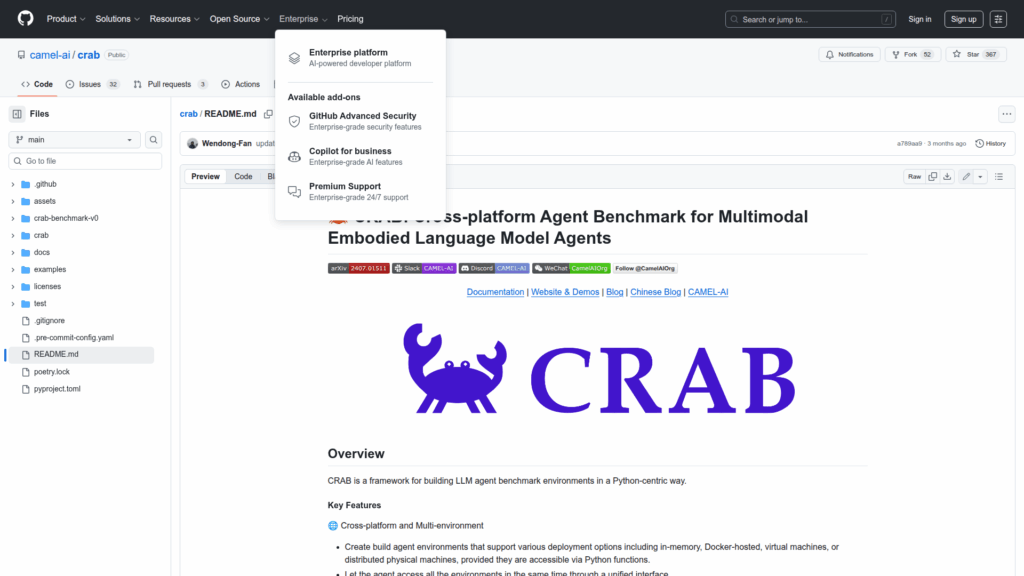

CRAB is a Python‚Äëcentric framework and benchmark suite designed to build, run, and evaluate language‚Äëmodel driven agents across multiple types of environments. It targets researchers and developers who need a reproducible way to create agent environments, define tasks, and measure agent behavior in in‚Äëmemory setups, Docker containers, virtual machines, or distributed physical machines so long as they are accessible via Python functions. The repository includes a benchmark collection (crab-benchmark-v0) with datasets and experiment code, example scripts that run template environments with OpenAI models, installation instructions, a demo video, and a reference paper on arXiv. CRAB emphasizes a unified interface to let an agent access different environments concurrently and supports multimodal embodied language model agents.