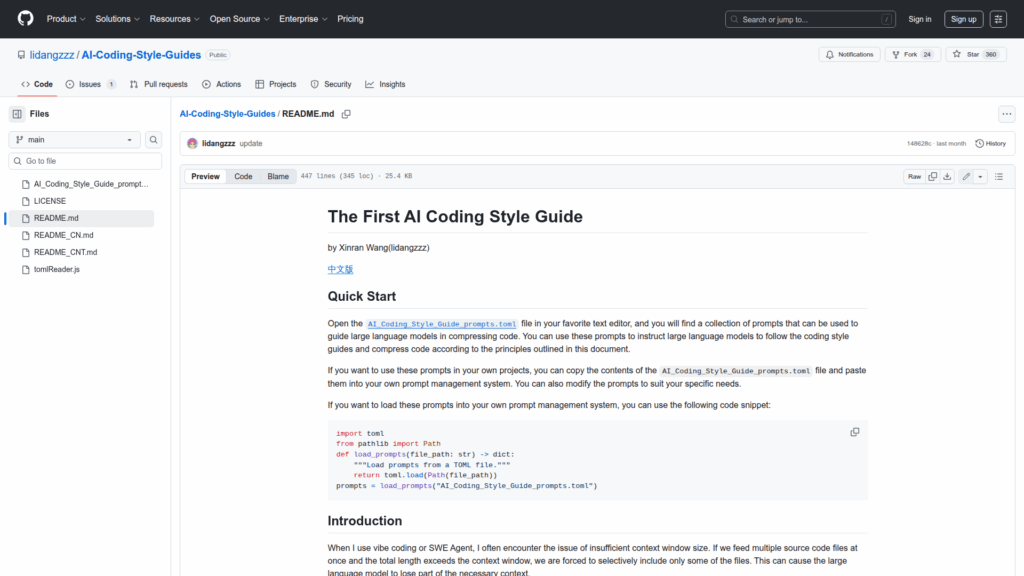

AI-Coding-Style-Guides

Basic Information

This repository is a practical collection of coding style guides and ready-to-use prompts for compressing source code used with large language models (LLMs), SWE agents, and vibe coding workflows. It provides general rules and language-specific guidance for reducing whitespace, shortening identifiers, and refactoring code, so that more files and context fit within an LLM's context window and token costs go down. The repository includes a prompts file in TOML format, example compression pipelines, and step-by-step examples in TypeScript and C++ that demonstrate progressive compression stages. It is aimed at developers, prompt engineers, and teams who want reproducible, systematic ways to balance compactness against maintainability when using AI-driven coding tools.
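To make "progressive compression stages" concrete, here is a small hypothetical TypeScript illustration. It is not taken from the repository, and the function and its names are invented for this sketch. Stage 0 is readable code, stage 1 only removes whitespace, and stage 2 shortens local identifiers and refactors the loop into a `reduce`. The repository's actual stages and rules may differ. The `V0`/`V1`/`V2` suffixes exist only so that all three stages can coexist in one file.

```typescript
// Stage 0: original, readable version (hypothetical example).
function avgOrderValueV0(orders: { amount: number }[]): number {
  if (orders.length === 0) {
    return 0; // avoid dividing by zero for an empty list
  }
  let totalAmount = 0;
  for (const order of orders) {
    totalAmount += order.amount;
  }
  return totalAmount / orders.length;
}

// Stage 1: whitespace reduced; names and logic unchanged.
function avgOrderValueV1(orders:{amount:number}[]):number{if(!orders.length)return 0;let totalAmount=0;for(const order of orders)totalAmount+=order.amount;return totalAmount/orders.length;}

// Stage 2: local identifiers shortened and the loop refactored into reduce.
// The property name `amount` is kept because it is part of the data contract
// that callers depend on; only names private to the function are shortened.
function avgOrderValueV2(o:{amount:number}[]):number{return o.length?o.reduce((s,x)=>s+x.amount,0)/o.length:0}

// Usage: every stage should behave identically.
const sample = [{ amount: 10 }, { amount: 20 }, { amount: 30 }];
console.log(avgOrderValueV0(sample), avgOrderValueV1(sample), avgOrderValueV2(sample)); // 20 20 20
```

Each stage trades readability for fewer characters. Behavior is preserved only if the refactoring is checked, for example by running the same inputs through every stage as above. Actual token savings depend on the model's tokenizer and are not measured here.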