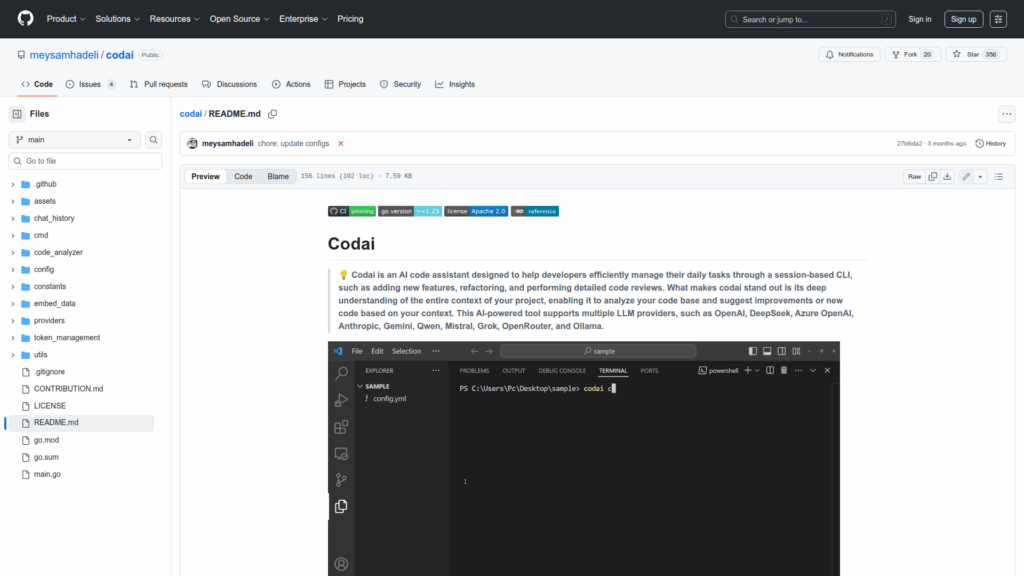

codai

Basic Information

Codai is an AI-powered code assistant delivered as a session-based command-line tool for developers. It analyzes a project's full context and helps with everyday development tasks such as adding features, refactoring, writing tests, performing detailed code reviews, and suggesting bug fixes. To save tokens, Codai summarizes project context with Tree-sitter: it sends the LLM concise signatures rather than full implementations and retrieves full sections only on demand. It supports multiple LLM providers and models, needs no setup beyond an API key, and can be configured per project with a codai-config.yml file. A .codai-gitignore file excludes files from analysis. The repository includes installation instructions using go install and guidance for both cloud and local model usage.
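To make the signature-only summary concrete, here is a minimal sketch of the idea. It is not Codai's implementation: it swaps Tree-sitter for Go's standard go/parser and go/printer packages so that it runs without extra dependencies, and the names Signatures and sampleSource are hypothetical, chosen only for this illustration.

```go
package main

import (
	"bytes"
	"fmt"
	"go/ast"
	"go/parser"
	"go/printer"
	"go/token"
	"strings"
)

// sampleSource is a hypothetical file standing in for project code.
const sampleSource = `package store

func Add(a, b int) int {
	return a + b
}

type Store struct{ data map[string]string }

func (s *Store) Get(key string) (string, bool) {
	v, ok := s.data[key]
	return v, ok
}
`

// Signatures parses Go source and returns each top-level function or method
// declaration with its body removed. This mirrors the "signatures instead of
// full implementations" idea, using the Go standard library rather than
// Tree-sitter (an assumption made to keep the sketch dependency-free).
func Signatures(src string) ([]string, error) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, "example.go", src, 0)
	if err != nil {
		return nil, err
	}

	var sigs []string
	for _, decl := range file.Decls {
		fn, ok := decl.(*ast.FuncDecl)
		if !ok {
			continue // skip type, var, const, and import declarations
		}
		fn.Body = nil // drop the implementation, keep only the signature

		var buf bytes.Buffer
		if err := printer.Fprint(&buf, fset, fn); err != nil {
			return nil, err
		}
		sigs = append(sigs, strings.TrimSpace(buf.String()))
	}
	return sigs, nil
}

func main() {
	sigs, err := Signatures(sampleSource)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	// Each entry is a compact summary line that could be sent in place of
	// the full function body.
	for _, s := range sigs {
		fmt.Println(s)
	}
}
```

The summary lines are much shorter than the original source, which is where the token saving comes from; a tool following this approach would fetch a full function body only when the model asks for it.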