invariant

Basic Information

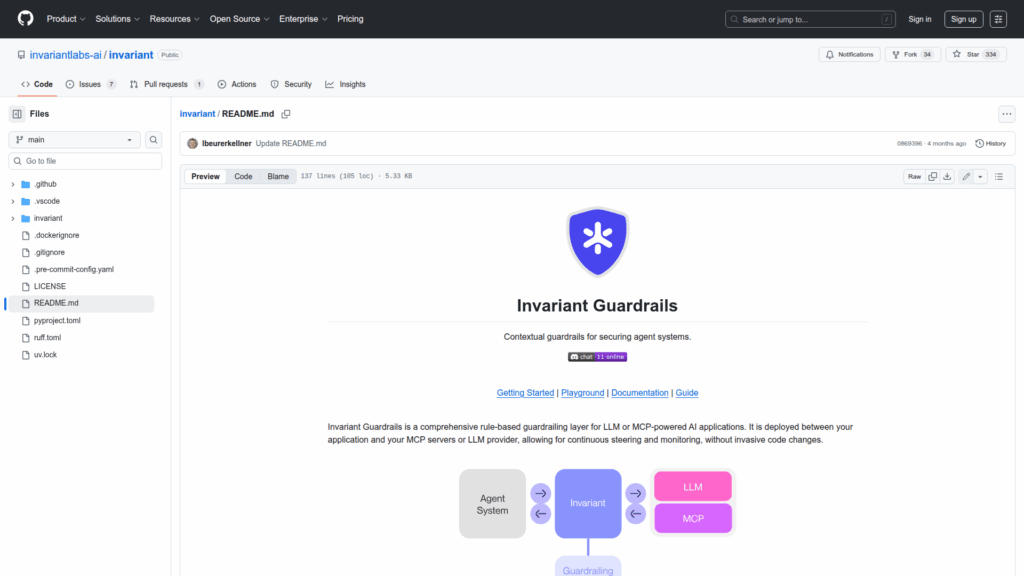

Invariant Guardrails is a rule-based guardrailing layer designed to secure LLM and multi-component platform (MCP) powered AI applications. It operates between an application and its LLM or MCP servers as a proxy that continuously steers, inspects and monitors requests and tool calls without requiring invasive changes to application code. The project provides a Python-inspired domain specific language for writing matching rules that identify and prevent unsafe or malicious agent behaviors by inspecting message traces and function/tool invocations. It can be deployed via a Gateway integration that evaluates rules on each request, or used programmatically via the invariant-ai package to run policies locally or via the Invariant Guardrails API. The repository includes example rules, detectors such as prompt injection checks, a standard library of operations, documentation and a playground for testing rules.