Basic Information

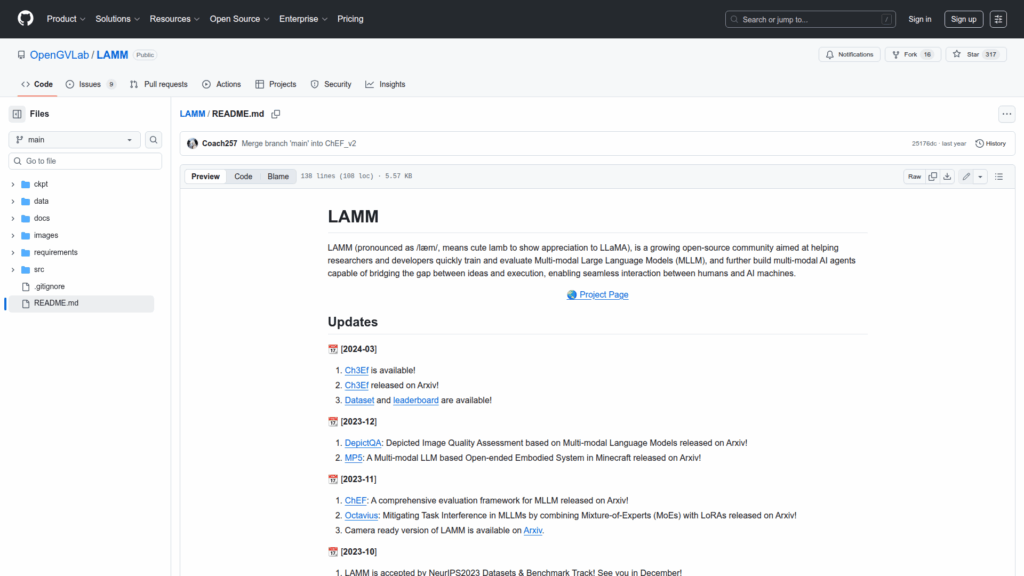

LAMM is an open-source project and community that helps researchers and developers train, evaluate, and build multi-modal large language models (MLLMs) and multi-modal AI agents. The repository aggregates datasets, benchmarks, evaluation tools, example training code, and demos for research on MLLMs and agent-style applications. It covers both 2D and 3D tasks and links to the papers and preprints that describe its datasets and evaluation frameworks, along with a project page and a getting-started tutorial. The README highlights model checkpoints and dataset releases on Hugging Face, a demo deployment, and a public leaderboard, aiming to bridge the gap between research ideas and their execution in multi-modal AI systems.

Links

Stars

316

Language

GitHub Repository

App Details

Features

The repository bundles a range of research assets and tooling. It publishes datasets and benchmarks for multi-modal tasks, evaluation frameworks such as ChEF and Ch3Ef, and task-specific contributions such as Octavius, DepictQA, and MP5. It provides evaluation code for 2D and 3D tasks, command-line demo tools, a tutorial site, and project web pages; checkpoints and leaderboards are hosted externally. The README also mentions a lightweight training framework optimized for mid-range GPUs and support for LLaMA2 fine-tuning. Documentation and citation information for the associated papers are included to help users reproduce experiments and compare model performance on standardized benchmarks.

Use Cases

LAMM centralizes datasets, benchmarks, evaluation protocols, and example training and demo code for multi-modal LLM research. Teams can use these resources to train and fine-tune models, evaluate them on standardized 2D and 3D tasks, reproduce published experiments, and compare results on public leaderboards. The tutorial and demo lower the barrier to experimentation, while the paper list and citations support academic use. Because the license restricts outputs to non-commercial research use, the repository is mainly suited to academic and research work rather than commercial deployment.