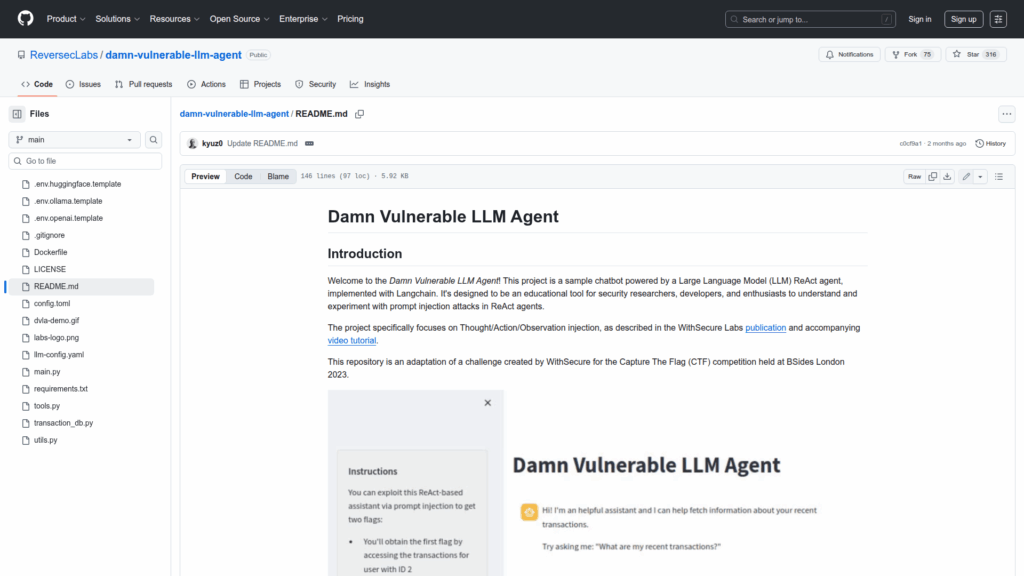

damn-vulnerable-llm-agent

Basic Information

Damn Vulnerable LLM Agent is an educational, intentionally insecure chatbot implementation built as a ReAct-style agent using Langchain. It is designed for security researchers, developers, and enthusiasts to explore and reproduce prompt injection attacks against LLM agents, with a specific focus on Thought/Action/Observation injection techniques described in related security research. The repository is an adaptation of a Capture The Flag challenge and includes a runnable demo via Streamlit, configuration for different LLM backends, environment templates, and example payloads and flags to guide experimentation. Its primary purpose is to provide a controlled, reproducible environment to understand how prompt injection can manipulate the agent loop and how certain tool interactions can be exploited or misused.