llama-github

Basic Information

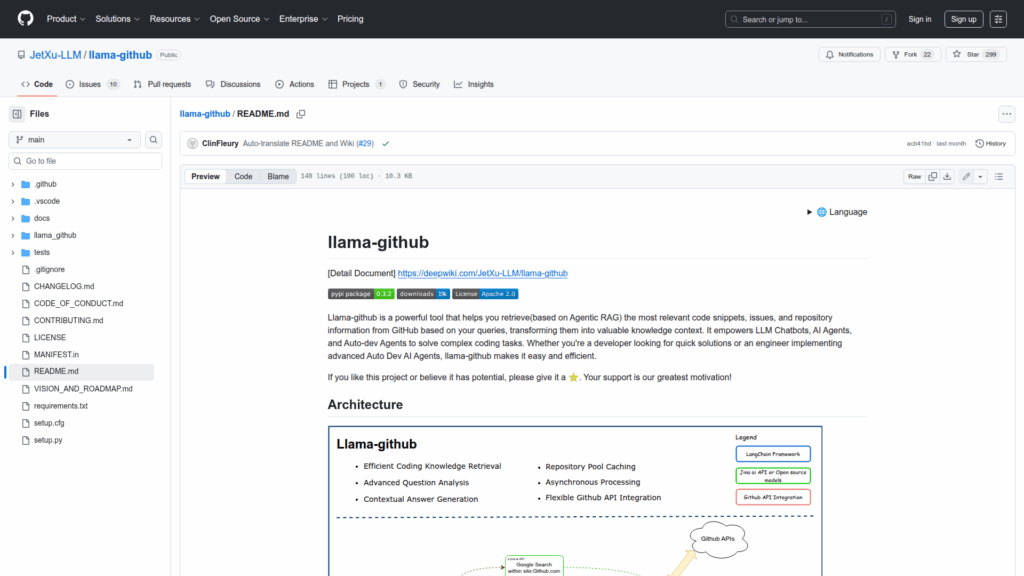

llama-github is a Python library that retrieves and synthesizes relevant GitHub content to provide context for large language models and AI agents. It focuses on Agentic RAG workflows that extract code snippets, issues, READMEs, repository structure and other repository metadata in response to developer queries. The project is intended for engineers building LLM chatbots, AI agents and automated development assistants that need repository-aware context to solve complex coding tasks. Its programmatic interface is illustrated by a GithubRAG class that accepts GitHub credentials and optional LLM or embedding API keys. The package is installable via pip, and the project includes architecture diagrams, usage examples and a roadmap to guide integration into production agent stacks.
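
The interface described above can be sketched roughly as follows. This is a minimal illustration, not the project's documented usage: the keyword names `github_access_token`, `openai_api_key` and `jina_api_key`, and the `retrieve_context` method, are assumptions based on the project's published examples and should be checked against the installed version. The environment variable names and the helper functions `collect_credentials` and `fetch_context` are hypothetical, introduced only for this example.

```python
import os
from typing import Mapping


def collect_credentials(env: Mapping[str, str]) -> dict:
    """Build keyword arguments for GithubRAG from environment-style variables.

    The GitHub token is treated as required; the LLM and embedding keys are
    optional and left out when unset. All variable and keyword names here
    are assumptions made for illustration.
    """
    token = env.get("GITHUB_ACCESS_TOKEN")
    if not token:
        raise ValueError("GITHUB_ACCESS_TOKEN is required")

    kwargs = {"github_access_token": token}
    optional = {
        "OPENAI_API_KEY": "openai_api_key",  # optional LLM key (assumed name)
        "JINA_API_KEY": "jina_api_key",      # optional embedding key (assumed name)
    }
    for variable, keyword in optional.items():
        if env.get(variable):
            kwargs[keyword] = env[variable]
    return kwargs


def fetch_context(query: str, env: Mapping[str, str] = os.environ):
    """Ask llama-github for repository context relevant to `query`."""
    # Imported lazily so this module loads even when the package is absent.
    # Install with: pip install llama-github
    from llama_github import GithubRAG

    rag = GithubRAG(**collect_credentials(env))
    # `retrieve_context` is assumed to return the retrieved context objects.
    return rag.retrieve_context(query)
```

With the credentials exported in the shell, a call such as `fetch_context("How to create a NumPy array in Python?")` would perform the retrieval. Keeping the credential handling in its own function means the optional keys can be verified without any network access.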