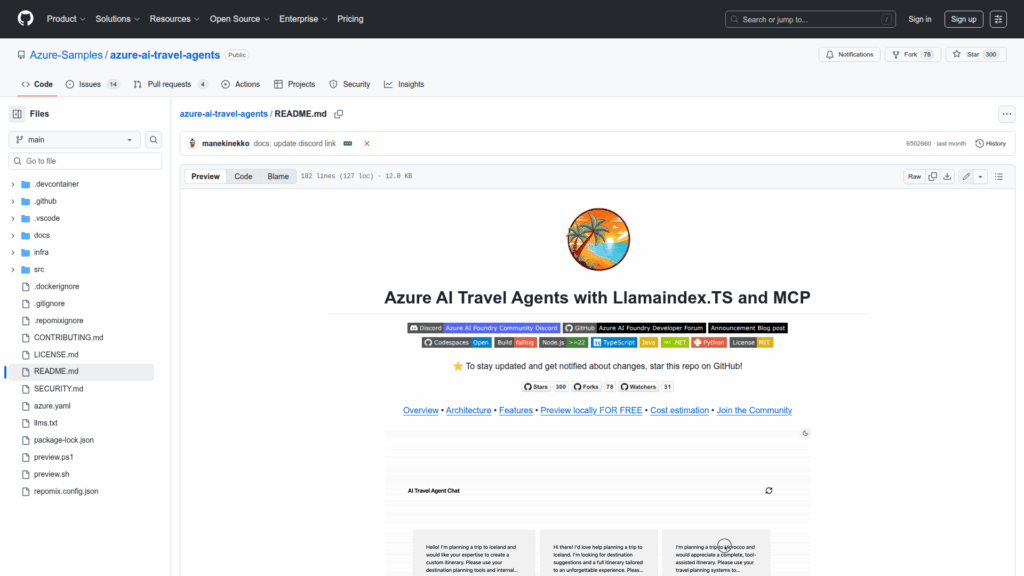

azure-ai-travel-agents

Basic Information

This repository is an enterprise-grade sample application that demonstrates how to build and run a multi-agent travel assistant using LlamaIndex.TS and the Model Context Protocol (MCP). It provides a deployable reference architecture in which specialized agents handle distinct travel workflows: customer query understanding, destination recommendation, itinerary planning, code evaluation, model inference and web search. The agents access their tools through MCP servers implemented in Python, Node.js, Java and .NET.

All components are containerized with Docker and are designed to run serverlessly on Azure Container Apps, or locally using Docker Model Runner. The project also includes orchestration services, monitoring through the Aspire Dashboard with OpenTelemetry integration, an `llms.txt` file that helps LLMs understand the project, a one-step preview setup script for local testing, advanced setup documentation, deployment instructions using `azd up`, and guidance for cleaning up the Azure resources afterwards.
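To make the multi-agent idea concrete, here is a minimal, dependency-free sketch of the triage step: a customer query is routed to one specialized agent, with web search as a fallback. This is not the repository's implementation. The real orchestrator uses LlamaIndex.TS agents backed by an LLM and calls tools over MCP, whereas this sketch assumes simple keyword matching in place of LLM intent classification and makes no MCP calls. The agent names, keywords and the `route`/`answer` functions are all hypothetical.

```typescript
// Hypothetical agent names; the repository's actual agent identifiers may differ.
type AgentName = "DestinationRecommendation" | "ItineraryPlanning" | "WebSearch";

interface Agent {
  name: AgentName;
  // Keywords stand in for an LLM's intent classification (assumption).
  keywords: string[];
  // In the real system this would invoke MCP tools; here it just echoes.
  handle: (query: string) => string;
}

const agents: Agent[] = [
  {
    name: "DestinationRecommendation",
    keywords: ["where", "destination", "recommend"],
    handle: (q) => `Suggesting destinations for: ${q}`,
  },
  {
    name: "ItineraryPlanning",
    keywords: ["itinerary", "plan", "schedule"],
    handle: (q) => `Drafting an itinerary for: ${q}`,
  },
];

// Used when no specialized agent matches the query.
const fallback: Agent = {
  name: "WebSearch",
  keywords: [],
  handle: (q) => `Searching the web for: ${q}`,
};

// Triage: return the first agent whose keyword occurs in the query.
function route(query: string): Agent {
  const lower = query.toLowerCase();
  for (let i = 0; i < agents.length; i++) {
    const agent = agents[i];
    for (let j = 0; j < agent.keywords.length; j++) {
      if (lower.indexOf(agent.keywords[j]) !== -1) {
        return agent;
      }
    }
  }
  return fallback;
}

// Route the query, then let the chosen agent produce a reply.
function answer(query: string): { agent: AgentName; reply: string } {
  const agent = route(query);
  return { agent: agent.name, reply: agent.handle(query) };
}
```

For example, `answer("Plan a 3-day itinerary in Lisbon").agent` evaluates to `"ItineraryPlanning"`, while a query that matches no keyword, such as `"Current visa rules for Japan"`, falls through to `"WebSearch"`. Keeping the router separate from the agents mirrors the separation the repository describes between orchestration and specialized agents, so the keyword matcher could be swapped for an LLM call without changing the agents themselves.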