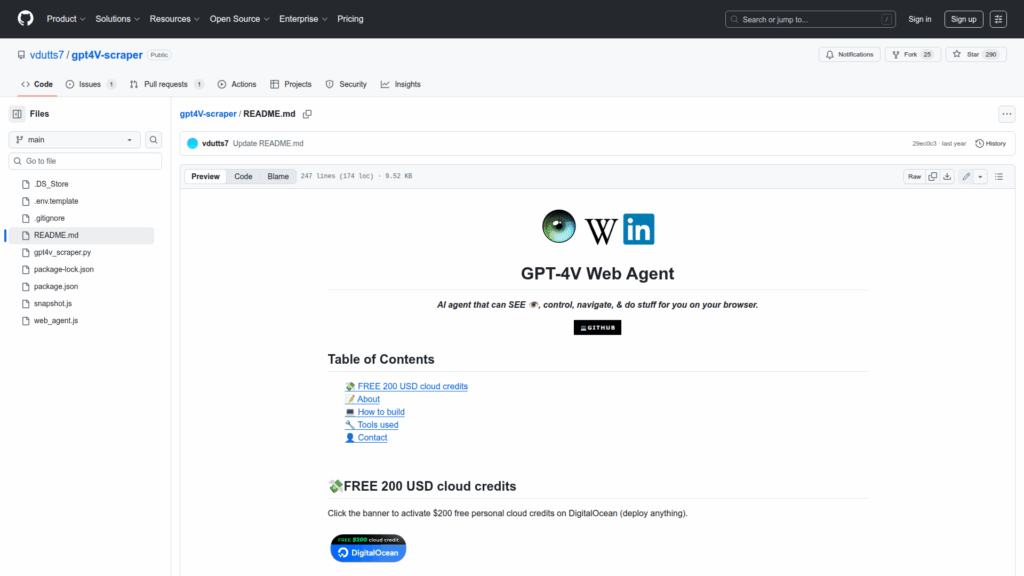

gpt4V-scraper

Basic Information

This repository provides a working web agent that automates full-page web captures, scraping, and image-based content extraction using GPT-4V. It combines two parts. Node.js scripts use Puppeteer (with a stealth plugin) to drive a real desktop Chrome or Chrome Canary session for navigation and screenshots. Python code then sends the captured images to the GPT-4V API for image-to-text extraction. Besides the snapshot scripts, the project includes a real-time interactive web agent that can perform guided browsing and Bing searches.

Configuration relies on a .env file that holds an OpenAI API key, together with local browser executable paths and user data directories. Reusing an existing browser profile this way lets the agent reach pages that require a logged-in session. The included example commands and usage scenarios show how to capture snapshot.jpg and receive structured text output in the console.
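The Python half of this pipeline (read the API key from .env, embed the screenshot, send it to GPT-4V) can be sketched as follows. This is a minimal illustration, not the repository's code. It assumes the OpenAI Chat Completions endpoint, the `gpt-4-vision-preview` model name, and an `OPENAI_API_KEY` entry in .env. The helper names `load_env_file`, `image_to_data_url`, `build_vision_request`, and `send_request` are hypothetical. It uses only the standard library.

```python
import base64
import json
import urllib.request
from pathlib import Path


def load_env_file(path: str) -> dict:
    """Parse simple KEY=VALUE lines from a .env file (hypothetical helper).

    Blank lines and '#' comments are skipped; surrounding quotes are stripped.
    """
    values = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def image_to_data_url(path: str) -> str:
    """Encode a JPEG screenshot (e.g. snapshot.jpg) as a base64 data URL."""
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:image/jpeg;base64,{encoded}"


def build_vision_request(image_path: str, prompt: str,
                         model: str = "gpt-4-vision-preview",  # assumed model name
                         max_tokens: int = 1024) -> dict:
    """Build a Chat Completions body pairing one text prompt with one image."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url",
                     "image_url": {"url": image_to_data_url(image_path)}},
                ],
            }
        ],
    }


def send_request(body: dict, api_key: str,
                 url: str = "https://api.openai.com/v1/chat/completions") -> str:
    """POST the request and return the model's text reply (needs network access)."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Suppose the Node.js script has already written snapshot.jpg. A call such as `send_request(build_vision_request("snapshot.jpg", "Extract all visible text."), load_env_file(".env")["OPENAI_API_KEY"])` would then print-ready text from the model. Embedding the screenshot as a base64 data URL means the image never has to be hosted anywhere before GPT-4V can read it.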