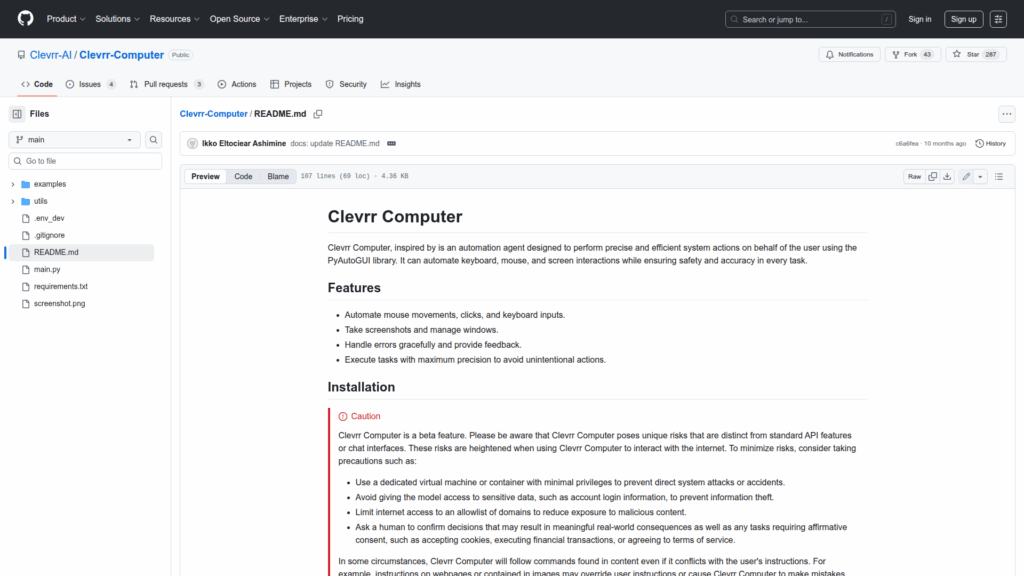

Clevrr-Computer

Basic Information

Clevrr Computer is a desktop automation agent that performs precise system actions on behalf of a user by combining Python automation and multimodal language models. It uses PyAutoGUI to simulate mouse movements, clicks, keyboard inputs and window management while continuously capturing screenshots to interpret the on-screen context. The agent creates a chain-of-thought plan for tasks, queries the screen via a get_screen_info tool, and executes code-driven actions through a PythonREPLAst tool. The repository includes a runnable application with a floating TKinter interface, command-line flags to choose models (gemini or openai) and configuration via environment variables. The README emphasizes safety, advising use in isolated VMs or containers and restricting internet and sensitive data access to reduce risk from prompt injection or unintended real-world effects.