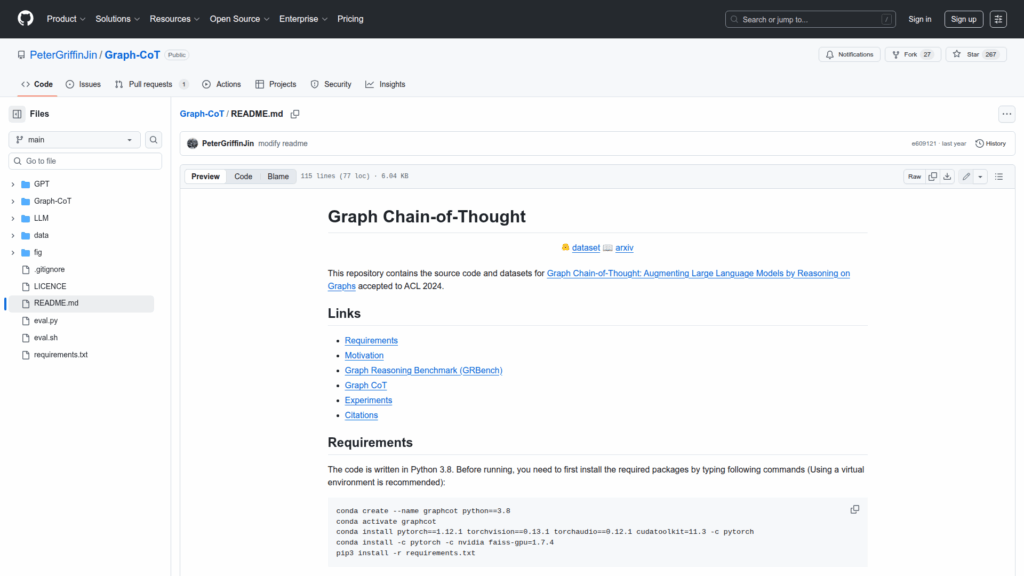

Graph-CoT

Basic Information

This repository contains the source code, datasets, and experimental scripts for Graph Chain-of-Thought (Graph-CoT), a method introduced in an ACL 2024 paper that augments large language models (LLMs) by letting them reason step by step over text-attributed graphs. It provides the Graph-CoT implementation together with the Graph Reasoning Benchmark (GRBench), which covers ten real-world graphs from the academic, e-commerce, literature, healthcare, and legal domains.

The repository documents environment setup and dependencies, the expected locations for processed graphs and question-answer sets, and how to run Graph-CoT and the related baselines. Model code is organized into separate folders for Graph-CoT, the open-source LLM baselines, and the GPT variants. The goal is to support reproducible research on augmenting LLMs with structured graph knowledge.
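To make "stepwise reasoning on a text-attributed graph" concrete, here is a minimal, hypothetical sketch of such a loop. It is not the repository's code. On each step a policy chooses a graph operation, the operation runs against the graph, and the result is fed back until the policy decides to finish. All of the following are illustrative assumptions: the class `TextAttributedGraph`, the operation names (`RetrieveNode`, `NodeFeature`, `NeighborCheck`, `NodeDegree`), `graph_cot`, and `scripted_policy`. In particular, `scripted_policy` is a fixed script standing in for an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class TextAttributedGraph:
    """Toy text-attributed graph (hypothetical): nodes carry text features and typed edges."""
    features: dict[str, dict[str, str]]  # node_id -> {feature_name: text}
    neighbors: dict[str, dict[str, list[str]]] = field(default_factory=dict)  # node_id -> {edge_type: [node_id, ...]}

    def retrieve_node(self, query: str) -> Optional[str]:
        # Naive substring match stands in for a real text retriever.
        q = query.lower()
        for node_id, feats in self.features.items():
            if any(q in text.lower() for text in feats.values()):
                return node_id
        return None

    def node_feature(self, node_id: str, name: str) -> str:
        return self.features[node_id].get(name, "")

    def neighbor_check(self, node_id: str, edge_type: str) -> list[str]:
        return self.neighbors.get(node_id, {}).get(edge_type, [])

    def node_degree(self, node_id: str, edge_type: str) -> int:
        return len(self.neighbor_check(node_id, edge_type))


# A policy maps (question, observations so far) to (action name, action arguments).
Policy = Callable[[str, list], tuple[str, tuple]]


def graph_cot(graph: TextAttributedGraph, policy: Policy, question: str, max_steps: int = 10):
    """Alternate between choosing an action (reasoning) and running it on the graph (execution)."""
    tools = {
        "RetrieveNode": graph.retrieve_node,
        "NodeFeature": graph.node_feature,
        "NeighborCheck": graph.neighbor_check,
        "NodeDegree": graph.node_degree,
    }
    observations: list = []
    trace = [f"Question: {question}"]
    for _ in range(max_steps):
        action, args = policy(question, observations)
        if action == "Finish":
            return args[0], trace
        result = tools[action](*args)  # execute the chosen graph operation
        observations.append(result)    # fed back to the policy on the next step
        trace.append(f"{action}{args} -> {result}")
    return None, trace  # step budget exhausted without an answer


def scripted_policy(question: str, observations: list) -> tuple[str, tuple]:
    # Hypothetical stand-in for an LLM: picks the next step from prior observations.
    if len(observations) == 0:
        return "RetrieveNode", ("Reasoning on graphs",)
    if len(observations) == 1:
        return "NeighborCheck", (observations[0], "author")
    if len(observations) == 2:
        return "NodeFeature", (observations[1][0], "name")
    return "Finish", (observations[2],)


graph = TextAttributedGraph(
    features={
        "p1": {"title": "Reasoning on graphs"},
        "p2": {"title": "Graph retrieval"},
        "a1": {"name": "Alice"},
    },
    neighbors={"p1": {"author": ["a1"], "cited_by": ["p2"]}},
)

answer, trace = graph_cot(graph, scripted_policy, "Who wrote 'Reasoning on graphs'?")
print(answer)  # Alice
```

The graph operations are plain functions and the policy is an ordinary callable. That separation means the scripted policy could, in principle, be replaced by one that prompts an LLM with the question and the observations gathered so far, while the graph side stays unchanged.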