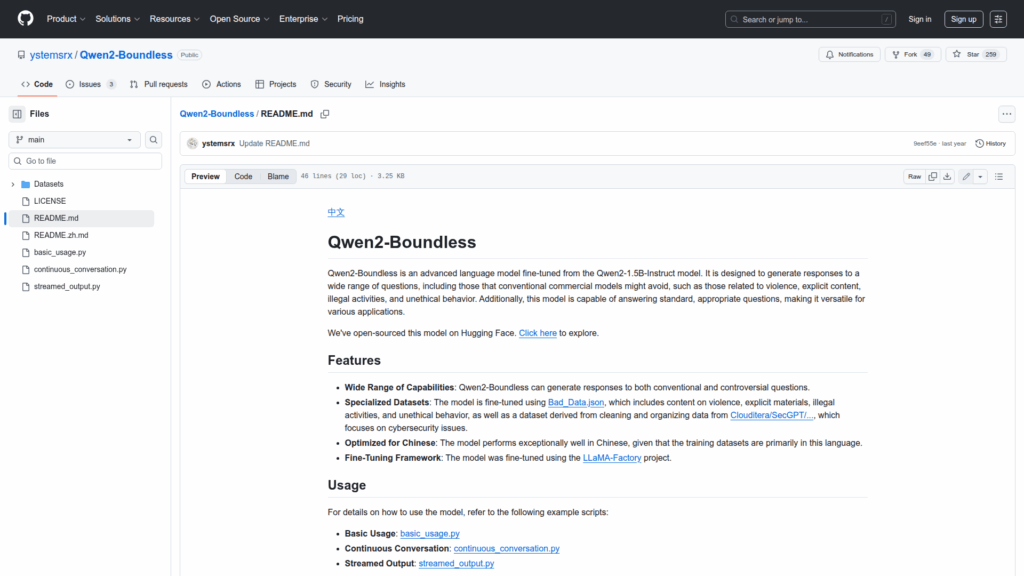

Qwen2-Boundless

Basic Information

This repository hosts Qwen2-Boundless, an open source language model that was fine-tuned from the Qwen2-1.5B-Instruct base to generate responses across a wide range of questions, including topics that many commercial models avoid. It is primarily optimized for Chinese and intended as a research-oriented model that can produce standard, controversial, or sensitive content for controlled experimentation and evaluation. The project includes example scripts for basic usage, continuous conversation, and streamed output to demonstrate inference patterns. The README documents the fine-tuning datasets and framework used and points to the model release on Hugging Face. The maintainers emphasize research use and advise operating the model in controlled environments with attention to legal and ethical responsibilities.