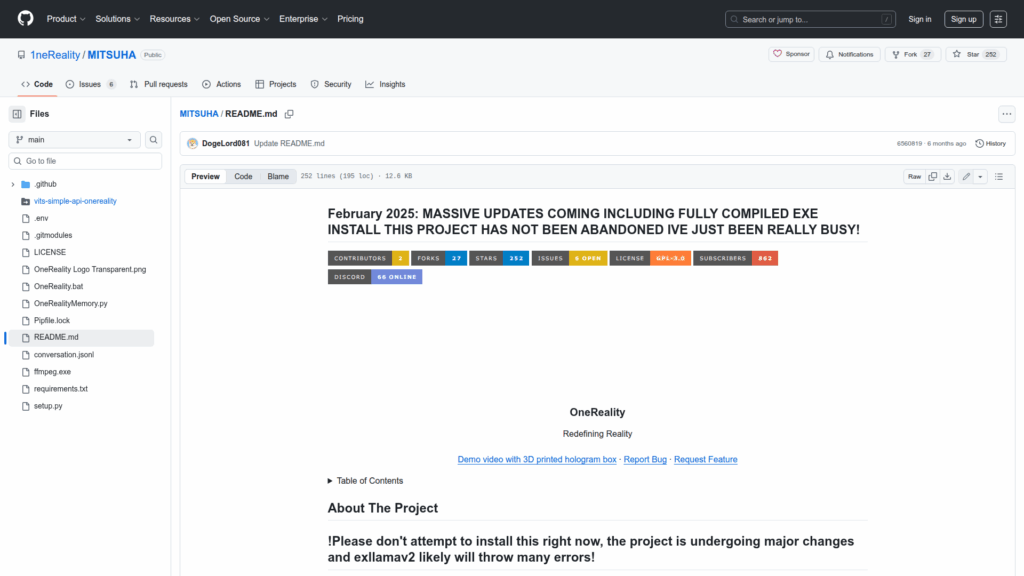

MITSUHA

Basic Information

MITSUHA is a hobbyist project that creates a local, multilingual virtual assistant (or 'waifu') that you speak to through a microphone and that answers aloud via text-to-speech (TTS). It is designed to run on a PC or in VR/AR setups, and it supports a Gatebox-style hologram, VTube Studio avatar integration, and optional smart home control through Tuya. Each response is produced by chaining voice input, speech-to-text, contextual memory lookup, local LLM inference, and TTS, so replies are both spoken and context-aware. The README stresses that the project is a work in progress with major changes underway, and it warns users not to attempt installation for now because some model components may throw errors. The project targets enthusiasts who want an interactive, multimodal local assistant with avatar and home automation features.
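The voice-to-voice flow described above can be pictured as a chain of pluggable stages. The following Python sketch is illustrative only and is not MITSUHA's actual code. The class names (`AssistantPipeline`, `Memory`), the keyword-overlap memory lookup, and the prompt format are all hypothetical, and the speech-to-text, LLM, and TTS stages are stand-in callables rather than any specific model or library.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stage signatures: the real STT, LLM, and TTS backends are not specified here.
SpeechToText = Callable[[bytes], str]
LanguageModel = Callable[[str], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class Memory:
    """Toy contextual memory: returns stored lines that share words with the query."""
    entries: List[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.entries.append(text)

    def lookup(self, query: str, k: int = 2) -> List[str]:
        words = set(query.lower().split())
        # Score each entry by how many query words it contains; drop zero matches.
        scored = [(len(words & set(e.lower().split())), e) for e in self.entries]
        scored = [pair for pair in scored if pair[0] > 0]
        # Stable sort keeps insertion order among equally scored entries.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [e for _, e in scored[:k]]


@dataclass
class AssistantPipeline:
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech
    memory: Memory = field(default_factory=Memory)

    def respond(self, audio: bytes) -> bytes:
        # 1. Transcribe the captured microphone audio.
        user_text = self.stt(audio)
        # 2. Retrieve related context from earlier exchanges.
        context = self.memory.lookup(user_text)
        # 3. Build a prompt from the context and the new utterance, then run the LLM.
        prompt = "\n".join(["Context:", *context, "User: " + user_text, "Assistant:"])
        reply = self.llm(prompt)
        # 4. Store this exchange so later turns can look it up.
        self.memory.add("User: " + user_text)
        self.memory.add("Assistant: " + reply)
        # 5. Synthesize the reply as audio.
        return self.tts(reply)


if __name__ == "__main__":
    demo = AssistantPipeline(
        stt=lambda audio: audio.decode("utf-8"),          # stub: treat audio bytes as text
        llm=lambda prompt: "echo: " + prompt.splitlines()[-2],  # stub: echo the user line
        tts=lambda text: text.encode("utf-8"),            # stub: "audio" is UTF-8 bytes
    )
    print(demo.respond(b"hello there"))
```

Keeping each stage behind a plain callable means a different speech-to-text engine, local LLM, or TTS voice could be swapped in without touching the orchestration logic; a real system would also need microphone capture, audio playback, and a stronger memory retriever than keyword overlap.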