rag-research-agent-template

Basic Information

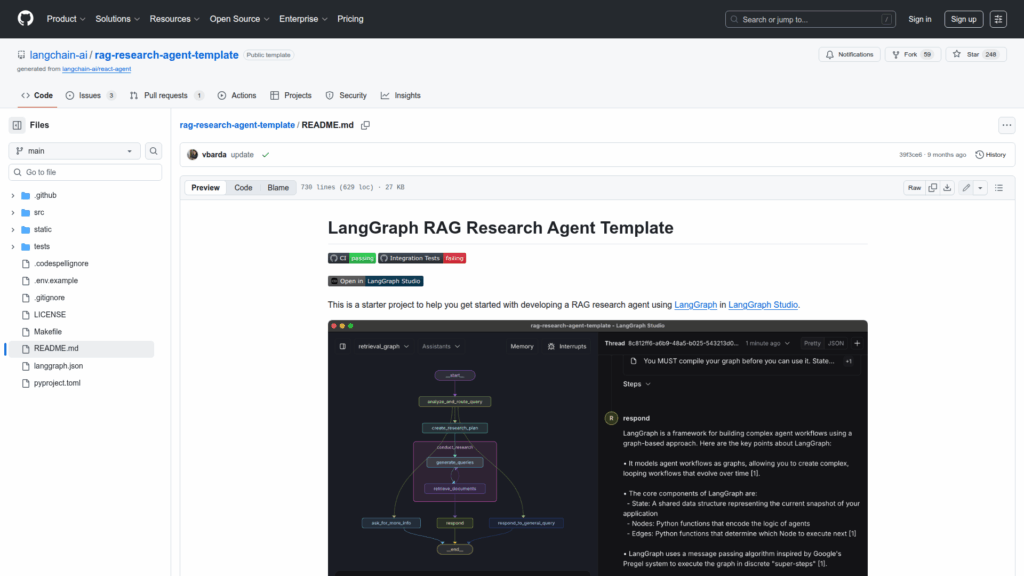

This repository is a starter template for building a retrieval-augmented generation (RAG) research agent with LangGraph and LangGraph Studio. It provides ready-made graph definitions and example workflows for prototyping a stateful agent that indexes documents, manages chat history, plans research steps, runs document retrievals in parallel, and composes responses from the retrieved context.

The codebase includes an index graph (src/index_graph), a retrieval graph (src/retrieval_graph), and a researcher subgraph (src/retrieval_graph/researcher_graph). It also ships sample documents that the indexer uses when no input is provided. The template is meant to be run and edited in LangGraph Studio, configured through a .env file, and connected to external retrievers, embedding providers, and LLMs for experimentation and extension.
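The flow described above can be sketched in plain Python with no LangGraph dependency: index a corpus, plan research steps, fan the retrievals out in parallel, and compose a response from what comes back. This is a minimal sketch under stated assumptions, not the template's code. `AgentState`, `index_docs`, `plan`, `retrieve`, `research`, `respond`, and the `DOCS` corpus are all hypothetical names. The planner splits the question on "and" instead of calling an LLM, and word overlap stands in for an embedding-based retriever.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple

# Hypothetical corpus standing in for the template's sample documents.
DOCS = [
    "LangGraph models agents as graphs of nodes that share state.",
    "Retrieval-augmented generation grounds answers in retrieved documents.",
    "Parallel retrieval lets several research queries run at once.",
]

Index = List[Tuple[Set[str], str]]


@dataclass
class AgentState:
    """Hypothetical agent state: chat history plus per-step outputs."""
    messages: List[str] = field(default_factory=list)
    steps: List[str] = field(default_factory=list)
    documents: List[str] = field(default_factory=list)


def tokens(text: str) -> Set[str]:
    # Lowercased words with surrounding punctuation stripped.
    return {w.strip(".,?!").lower() for w in text.split()} - {""}


def index_docs(texts: Optional[List[str]] = None) -> Index:
    # Fall back to the sample corpus when no input is given,
    # mirroring the indexer's behaviour described above.
    return [(tokens(t), t) for t in (texts if texts is not None else DOCS)]


def plan(question: str) -> List[str]:
    # Stand-in for an LLM planner: one research step per "and" clause.
    return [part.strip() for part in question.split(" and ") if part.strip()]


def retrieve(index: Index, query: str, k: int = 1) -> List[str]:
    # Rank documents by word overlap with the query (stable for ties),
    # keeping only the top k that share at least one word.
    q = tokens(query)
    ranked = sorted(index, key=lambda item: len(q & item[0]), reverse=True)
    return [text for terms, text in ranked[:k] if q & terms]


def research(state: AgentState, index: Index) -> AgentState:
    state.steps = plan(state.messages[-1])
    # Run one retrieval per research step concurrently (the fan-out).
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda step: retrieve(index, step), state.steps))
    for docs in results:
        for doc in docs:
            if doc not in state.documents:  # de-duplicate across steps
                state.documents.append(doc)
    return state


def respond(state: AgentState) -> str:
    # Stand-in for the LLM response step: echo the retrieved context.
    if not state.documents:
        return "No relevant documents found."
    answer = f"Based on {len(state.documents)} document(s): " + " ".join(state.documents)
    state.messages.append(answer)  # extend the chat history
    return answer


if __name__ == "__main__":
    question = "What is retrieval-augmented generation and how does parallel retrieval help?"
    state = research(AgentState(messages=[question]), index_docs())
    print(state.steps)
    print(respond(state))
```

In the template, these steps live in LangGraph graphs (the retrieval graph and the researcher subgraph) and call external LLMs, embedding providers, and retrievers; the thread pool here only imitates the parallel retrievals so the control flow is easy to follow.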