llm-movieagent

Basic Information

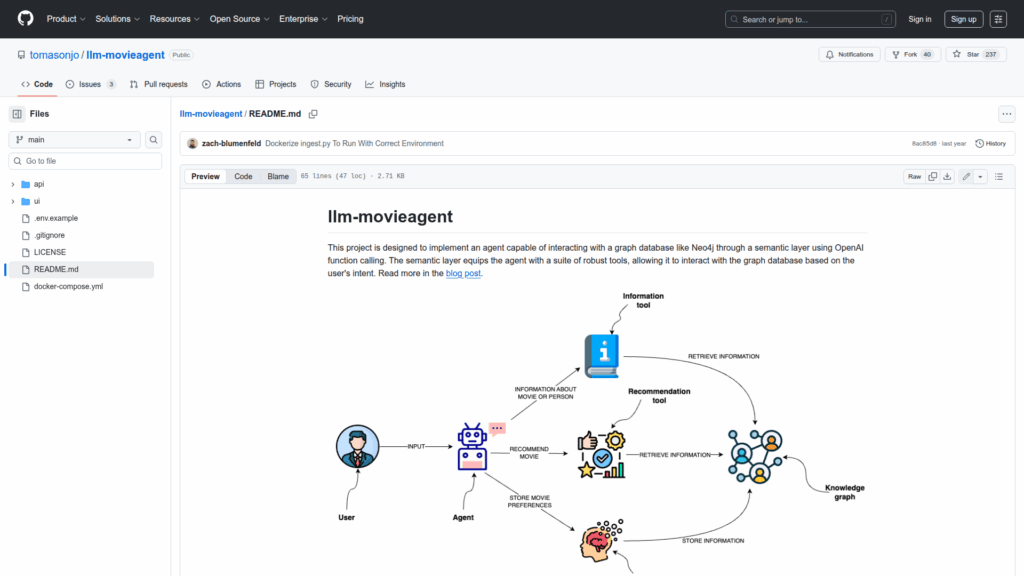

This repository packages an LLM-driven agent that interacts with a Neo4j graph database through a semantic layer and OpenAI function calling. It is a concrete implementation that maps user intent, expressed in natural language, to graph operations and queries. The project includes a Docker Compose setup that runs three parts: the Neo4j database, an API that uses the LangChain neo4j-semantic-layer template to connect an OpenAI model to the graph, and a simple Streamlit chat UI. It also provides an ingest script that populates the database with an example movie dataset and creates fulltext indices used to map free-form input to graph entities. OpenAI and Neo4j credentials must be supplied as environment variables.
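
As a minimal sketch of the credential check, the Python snippet below reads the four settings an app like this would need and fails fast when one is missing. The variable names (OPENAI_API_KEY, NEO4J_URI, NEO4J_USERNAME, NEO4J_PASSWORD) and the Settings and load_settings helpers are assumptions for illustration, not code taken from the repository.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Assumed variable names; check the repository's .env template for the exact keys.
REQUIRED_VARS = ("OPENAI_API_KEY", "NEO4J_URI", "NEO4J_USERNAME", "NEO4J_PASSWORD")


@dataclass(frozen=True)
class Settings:
    openai_api_key: str
    neo4j_uri: str
    neo4j_username: str
    neo4j_password: str


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read credentials from a mapping (defaults to os.environ) and fail fast if any are missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return Settings(
        openai_api_key=env["OPENAI_API_KEY"],
        neo4j_uri=env["NEO4J_URI"],
        neo4j_username=env["NEO4J_USERNAME"],
        neo4j_password=env["NEO4J_PASSWORD"],
    )
```

Accepting a plain mapping instead of reading os.environ directly keeps the check easy to test: a dict can stand in for the environment during local experiments.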

Stars: 235

App Details

Features

The project's semantic layer translates natural language into function calls backed by graph queries, giving the LLM a structured and more reliable way to interact with Neo4j. It exposes three primary tools: an information tool that retrieves movie and person data, a recommendation tool that produces personalized movie suggestions, and a memory tool that stores user preferences in the knowledge graph. The codebase is Dockerized, with separate services for Neo4j, an API built on LangChain templates, and a Streamlit UI served at localhost:8501. An ingest.py script imports a MovieLens-based dataset and creates the fulltext indices in Neo4j. Configuration lives in a simple .env file containing the OpenAI and Neo4j credentials.
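
The fulltext indices are what let free-form input, such as a misspelled movie title, resolve to a graph entity. Below is a minimal sketch of how a query string for such an index might be built, assuming Lucene fuzzy matching. The build_fulltext_query helper and the list of stripped characters are hypothetical, not the project's actual code.

```python
import re

# Characters with special meaning in Lucene query syntax (assumed list); they are
# replaced with spaces so arbitrary user text cannot break the query.
LUCENE_SPECIAL = re.compile(r'[+\-&|!(){}\[\]^"~*?:\\/]')


def build_fulltext_query(user_input: str, max_edits: int = 2) -> str:
    """Turn free-form text into a fuzzy Lucene query: every word must match,
    each allowing up to `max_edits` character edits (e.g. typos in a title)."""
    cleaned = LUCENE_SPECIAL.sub(" ", user_input)
    words = cleaned.split()
    return " AND ".join(f"{word}~{max_edits}" for word in words)
```

For example, "Casino Royal" becomes Casino~2 AND Royal~2. A string like this could then be passed to Neo4j's db.index.fulltext.queryNodes procedure together with the name of whichever index ingest.py creates. The default of 2 matches Lucene's maximum fuzzy edit distance.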

Use Cases

This repository is useful for developers and practitioners who want to prototype or build conversational recommenders or knowledge-driven agents backed by a graph database. It provides a ready-to-run environment that shows how to wire an OpenAI model to graph queries through function calling, which simplifies experimenting with natural-language interactions over a graph. The memory tool shows how user preferences can be persisted in the knowledge graph to personalize future responses. The ingest script and Docker Compose setup reduce setup time by providing sample data and containerized services, and the included Streamlit UI offers an immediate way to test dialogs and recommendations locally.
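
To illustrate how a memory tool might persist a preference, here is a hypothetical parameterized Cypher write wrapped in a Python helper. The User/Movie/RATED schema, the property names, and the 0.5 to 5.0 rating range are assumptions modeled on MovieLens, not the repository's actual query.

```python
from typing import Any, Dict, Tuple

# Hypothetical Cypher for the memory tool. Assumes MovieLens-style (:User) and
# (:Movie {title}) nodes linked by [:RATED {rating}]; the real schema may differ.
STORE_PREFERENCE_CYPHER = """
MERGE (u:User {userId: $user_id})
WITH u
MATCH (m:Movie {title: $title})
MERGE (u)-[r:RATED]->(m)
SET r.rating = toFloat($rating)
RETURN m.title AS title, r.rating AS rating
"""


def store_preference(user_id: str, title: str, rating: float) -> Tuple[str, Dict[str, Any]]:
    """Return a parameterized query and its parameters. Passing values as
    parameters rather than formatting them into the string avoids Cypher injection."""
    if not 0.5 <= rating <= 5.0:  # assumed MovieLens-style rating range
        raise ValueError("rating must be between 0.5 and 5.0")
    return STORE_PREFERENCE_CYPHER, {"user_id": user_id, "title": title, "rating": rating}
```

With the official neo4j Python driver (5.x), the returned pair could be executed as driver.execute_query(query, params). Because the relationship is written with MERGE, repeating a preference updates the existing rating instead of creating a duplicate relationship.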