Awesome-LLM-Papers-Comprehensive-Topics

Basic Information

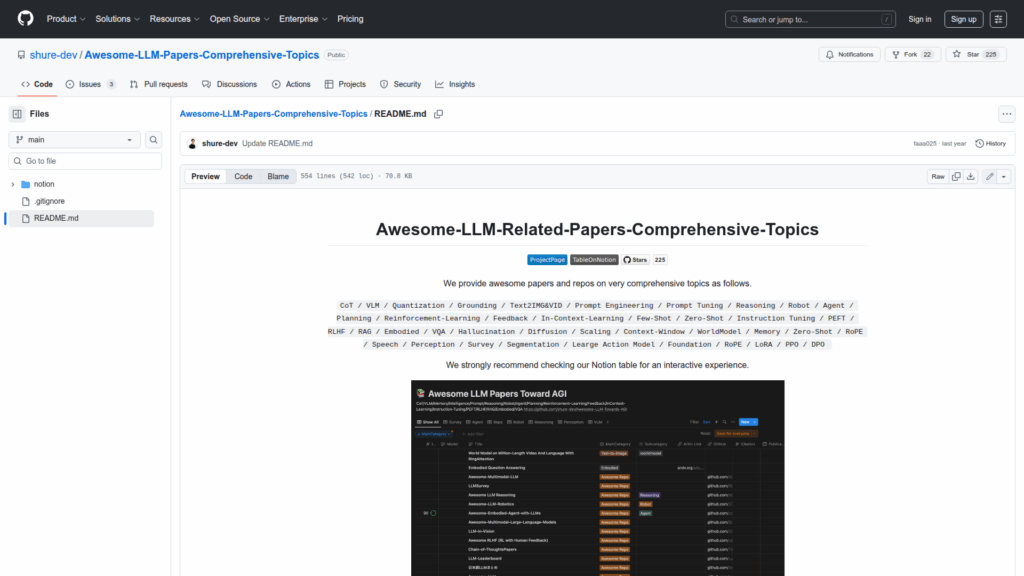

This repository is a curated, continuously updated collection of research papers and open-source repositories on large language models (LLMs) and multimodal AI. For each entry it records the title, links, and brief metadata. The entries span a wide range of subtopics in modern LLM research: vision-language models, agents and planning, robotics, retrieval-augmented generation, instruction tuning, quantization and model compression, reasoning and chain-of-thought, reinforcement learning and RLHF, memory and world models, diffusion-based generation, and evaluation and benchmarks, along with many surveys and resources. The README serves as the primary index and contains a large table linking each entry to its arXiv and GitHub pages. The repo also points users to an interactive Notion table for browsing and highlights many representative packages and labs. The repository states that the collection contains 516 papers and repos, and it is intended as a reference hub for keeping up with the LLM literature.