Trinity-RFT

Basic Information

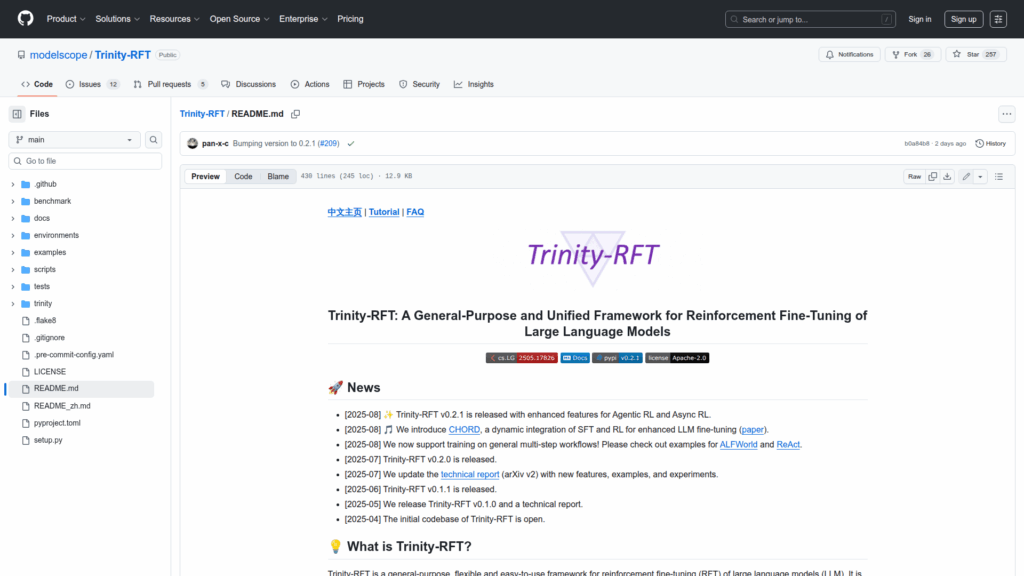

Trinity-RFT is a general-purpose framework for reinforcement fine-tuning (RFT) of large language models (LLMs). It provides a unified platform for researchers and developers to design, run, and scale reinforcement learning workflows that adapt LLM behavior to new tasks and interactive scenarios. The repository supports agent-environment interaction logic in single-step, multi-turn, and general multi-step workflows, and it includes examples and tutorials for common benchmarks and agentic tasks. It serves both experimental and production-style RFT use cases through modular components for rollout, training, data processing, and configuration. The project also includes a web-based studio for low-code configuration, command-line tools for running experiments, and support for common model and dataset sources, which make RFT experiments easier to reproduce and extend.
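To make the single-step versus multi-turn distinction concrete, here is a minimal, framework-agnostic sketch of an agent-environment rollout loop. It is not Trinity-RFT's API. `GuessNumberEnv`, `run_workflow`, `Experience`, and `bisect_policy` are hypothetical names, and the deterministic `bisect_policy` stands in for an LLM. Under these assumptions, a single-step workflow is the special case `max_turns=1`. A multi-turn workflow feeds each environment reply back to the policy and records one reward per turn, producing the kind of trajectory a trainer would consume.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical policy signature (stand-in for an LLM): (past turns, current observation) -> action.
Policy = Callable[[List[Tuple[str, str]], str], str]


@dataclass
class Experience:
    """One rollout trajectory: (observation, action) turns plus one reward per turn."""
    turns: List[Tuple[str, str]] = field(default_factory=list)
    rewards: List[float] = field(default_factory=list)

    @property
    def total_reward(self) -> float:
        return sum(self.rewards)


class GuessNumberEnv:
    """Hypothetical toy environment: guess a hidden integer between 1 and 100."""

    def __init__(self, target: int) -> None:
        self.target = target

    def reset(self) -> str:
        return "guess a number between 1 and 100"

    def step(self, action: str) -> Tuple[str, float, bool]:
        """Return (next observation, reward, done) for one agent action."""
        guess = int(action)
        if guess == self.target:
            return "correct", 1.0, True
        hint = "higher" if guess < self.target else "lower"
        return hint, 0.0, False


def run_workflow(env: GuessNumberEnv, policy: Policy, max_turns: int) -> Experience:
    """Roll out up to max_turns agent-environment turns; max_turns=1 is single-step."""
    exp = Experience()
    obs = env.reset()
    for _ in range(max_turns):
        action = policy(exp.turns, obs)
        exp.turns.append((obs, action))
        obs, reward, done = env.step(action)
        exp.rewards.append(reward)
        if done:
            break
    return exp


def bisect_policy(history: List[Tuple[str, str]], obs: str) -> str:
    """Deterministic stand-in for an LLM: binary search driven by the hints so far."""
    lo, hi = 1, 100
    for i, (_, action) in enumerate(history):
        # The hint for guess i is the observation that followed it.
        hint = history[i + 1][0] if i + 1 < len(history) else obs
        if hint == "higher":
            lo = int(action) + 1
        elif hint == "lower":
            hi = int(action) - 1
    return str((lo + hi) // 2)


if __name__ == "__main__":
    multi = run_workflow(GuessNumberEnv(37), bisect_policy, max_turns=10)
    print(len(multi.turns), multi.total_reward)  # 3 1.0
    single = run_workflow(GuessNumberEnv(37), bisect_policy, max_turns=1)
    print(len(single.turns), single.total_reward)  # 1 0.0
```

Separating the environment, the policy, and the loop mirrors the modular split the overview describes: rollout logic can change without touching the policy, and the recorded `Experience` is independent of how it will be used for training.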