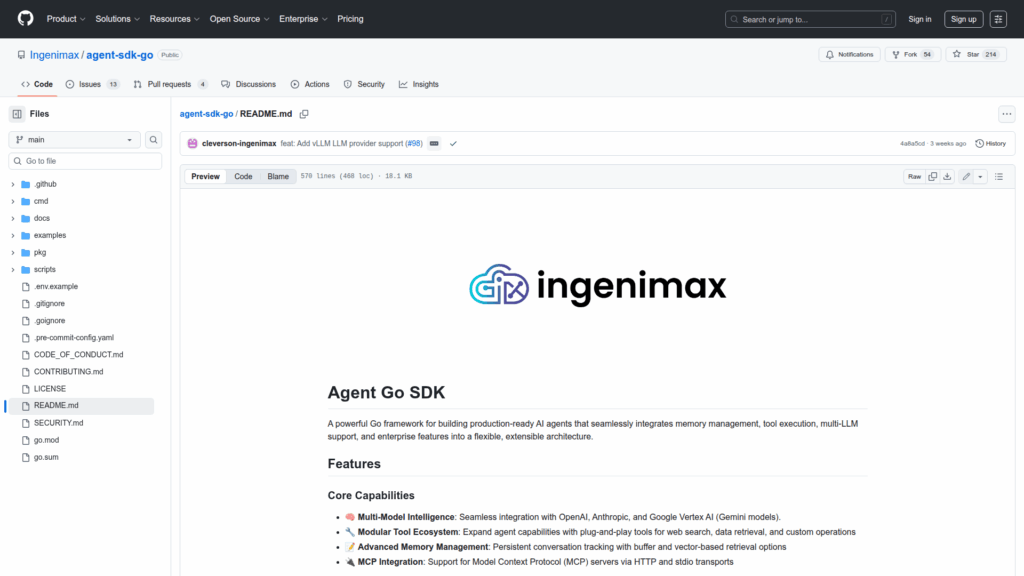

agent-sdk-go

Basic Information

Agent SDK Go is a Go framework for building production-ready AI agents. It combines LLM access, memory, tool execution, and enterprise features in a modular architecture. Agents built with it can use multiple model providers, including OpenAI, Anthropic, Google Vertex AI, Ollama, and vLLM, and can connect to external Model Context Protocol (MCP) servers.

The SDK supports persistent conversation memory backed by a buffer or by vector retrieval, with optional Redis for distributed memory. It also offers declarative YAML definitions for agents and tasks, structured JSON outputs, guardrails for safer deployments, and observability through tracing and logging.

The repository includes examples, a CLI wizard, and auto-configuration that generates agent profiles and tasks from system prompts. Together, these let teams prototype and deploy specialized agents while keeping multi-tenancy and operational controls in place.