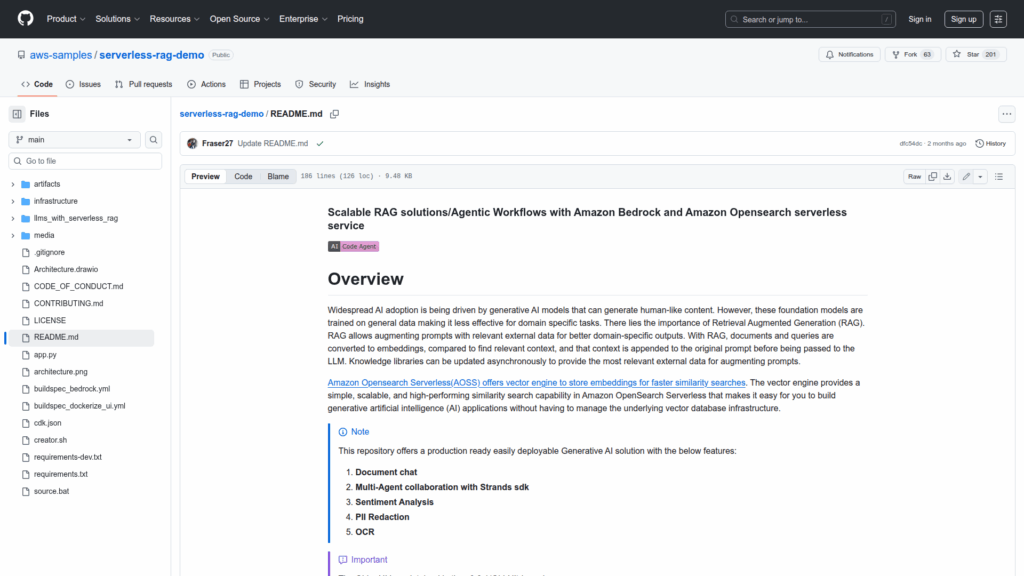

serverless-rag-demo

Basic Information

This repository provides a production-ready, easily deployable serverless Retrieval Augmented Generation (RAG) solution built on AWS services to augment foundation models with domain-specific content. It demonstrates document chat and document management workflows that convert documents and queries into embeddings, perform similarity search using the Amazon Opensearch Serverless vector engine, and append retrieved context to prompts sent to Amazon Bedrock models. The project also includes multi-agent collaboration through the Strands SDK, OCR for ingesting documents, PII redaction, sentiment analysis, and a document-aware chunking strategy for cross-document comparison. The README documents a guided CloudShell-based deployment using a creator script, an AppRunner-hosted UI protected by Amazon Cognito, Lambda functions and API Gateway, and instructions to integrate with existing Bedrock knowledge bases and embedding models. The codebase targets AWS practitioners who want an end-to-end serverless RAG demo configured for Anthropic Claude and Cohere embed models.