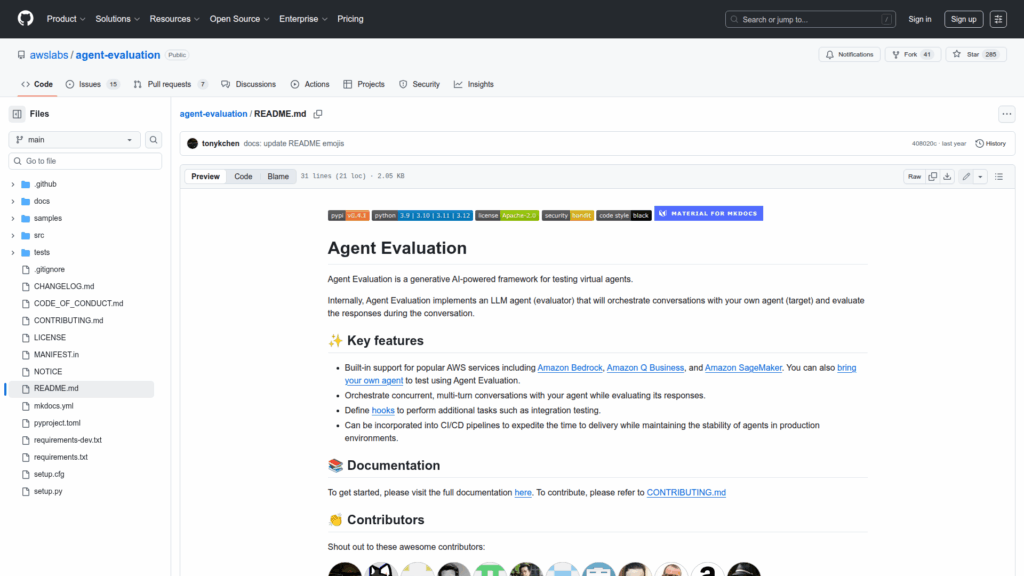

agent-evaluation

Basic Information

Agent Evaluation is a generative AI-powered framework for testing and validating virtual agents. Its LLM-based evaluator orchestrates multi-turn conversations with a target agent and assesses the agent's responses as each conversation proceeds. The project is a developer-focused testing tool: it can connect to popular AWS model and hosting services, and it also accepts custom agent targets. It can run multiple conversations concurrently, so several test scenarios are exercised in parallel, and it exposes hooks for additional tasks, such as integration checks, as part of a test flow. The README and documentation explain how to get started, how to add custom targets, and how to incorporate the framework into existing development and deployment workflows (for example, CI/CD pipelines) for systematic agent validation.
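To make that control flow concrete, here is a minimal, self-contained Python sketch. It is not Agent Evaluation's actual API. `Target`, `Evaluator`, `EchoTarget`, `ScriptedEvaluator`, `run_test`, `run_suite`, and the hook signature are all hypothetical names chosen for illustration. In the real framework an LLM generates the user turns and judges the replies. In this sketch, a scripted evaluator that matches substrings stands in for the LLM, so the example runs without any model access.

```python
from __future__ import annotations

import asyncio
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Protocol, Tuple

# (prompt sent by the evaluator, reply returned by the target)
Turn = Tuple[str, str]


class Target(Protocol):
    """Hypothetical interface for an agent under test (not the framework's real API)."""

    async def invoke(self, prompt: str) -> str: ...


class Evaluator(Protocol):
    """Hypothetical evaluator: drives the conversation, then judges it.

    In Agent Evaluation this role is played by an LLM; here it is pluggable.
    """

    def next_prompt(self, history: List[Turn]) -> Optional[str]: ...

    def judge(self, history: List[Turn]) -> bool: ...


class EchoTarget:
    """Toy custom target that echoes each prompt back."""

    async def invoke(self, prompt: str) -> str:
        await asyncio.sleep(0)  # stand-in for a network call to the real agent
        return f"echo: {prompt}"


@dataclass
class ScriptedEvaluator:
    """Rule-based stand-in for an LLM evaluator: fixed prompts, substring checks."""

    prompts: List[str]
    expected: List[str]  # substring each reply must contain, one per prompt

    def next_prompt(self, history: List[Turn]) -> Optional[str]:
        # Returning None ends the conversation.
        return self.prompts[len(history)] if len(history) < len(self.prompts) else None

    def judge(self, history: List[Turn]) -> bool:
        return len(history) == len(self.expected) and all(
            want in reply for (_, reply), want in zip(history, self.expected)
        )


@dataclass
class Result:
    name: str
    passed: bool
    history: List[Turn] = field(default_factory=list)


# Hypothetical post-test hook, e.g. to verify a record the agent should have created.
Hook = Callable[[Result], None]


async def run_test(name: str, target: Target, evaluator: Evaluator,
                   hooks: List[Hook], max_turns: int = 10) -> Result:
    history: List[Turn] = []
    # Multi-turn loop: the evaluator picks each prompt based on the history so far.
    while len(history) < max_turns:
        prompt = evaluator.next_prompt(history)
        if prompt is None:
            break
        reply = await target.invoke(prompt)
        history.append((prompt, reply))
    result = Result(name, evaluator.judge(history), history)
    for hook in hooks:
        hook(result)
    return result


async def run_suite(target: Target, tests: Dict[str, Evaluator],
                    hooks: List[Hook]) -> List[Result]:
    # Each test is an independent conversation, so run them concurrently.
    return list(await asyncio.gather(
        *(run_test(name, target, ev, hooks) for name, ev in tests.items())
    ))


if __name__ == "__main__":
    tests = {
        "greeting": ScriptedEvaluator(["hello"], ["hello"]),
        "order": ScriptedEvaluator(["book a table", "for two"], ["book", "three"]),
    }
    results = asyncio.run(run_suite(EchoTarget(), tests, [lambda r: None]))
    for r in results:
        print(r.name, "PASS" if r.passed else "FAIL")
    # greeting PASS
    # order FAIL
```

Because `Evaluator` is a protocol, you could replace `ScriptedEvaluator` with a class that calls an LLM without changing `run_test` or `run_suite`. Likewise, any object with an async `invoke` method can serve as a custom target.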