AI Agent Service Toolkit

Basic Information

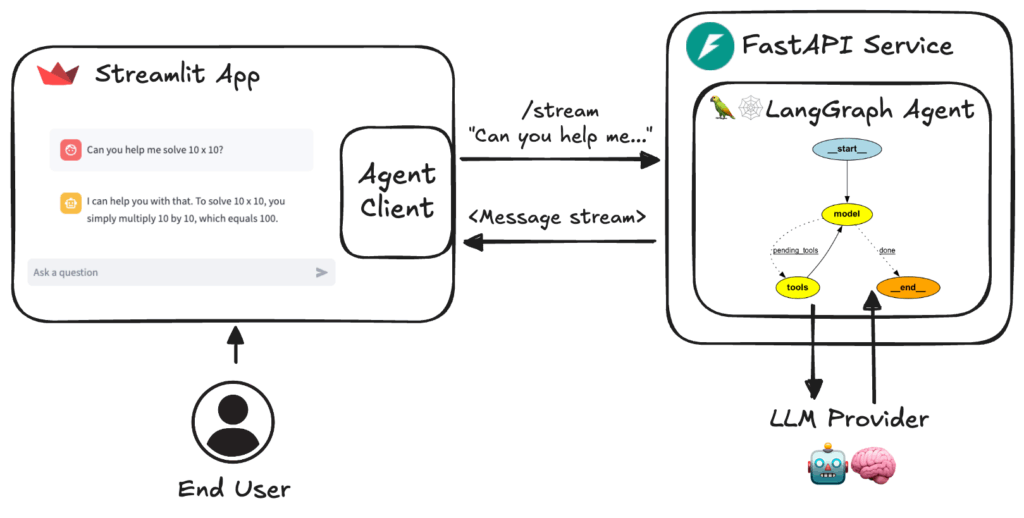

AI Agent Service Toolkit is a template and reference implementation for building, running, and deploying AI agents based on LangGraph. It bundles an agent definition, a FastAPI service that exposes both streaming and non-streaming endpoints, a Python client for invoking agents, and a Streamlit chat interface. Data models and configuration are defined with Pydantic.

The repository also includes example agents, an example RAG assistant that uses ChromaDB, content moderation via LlamaGuard, and integration points for LangSmith tracing. For full-stack local development it provides a Docker Compose setup with a PostgreSQL service. It also documents how to run the project locally without Docker and how to configure specific providers such as Ollama and VertexAI.

The toolkit is intended as a starting point for customizing agents, serving several agents from one service (each selected by URL path), and experimenting with LangGraph Studio for interactive agent development.
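The idea of selecting an agent by URL path, combined with streaming and non-streaming endpoints, can be illustrated with a minimal, dependency-free sketch. This is not the toolkit's actual code. The names `AGENTS`, `resolve`, `invoke`, `stream`, and the two toy agents are hypothetical. Plain Python generators stand in for LangGraph agents, a dataclass stands in for the Pydantic models, and the path layout (`/invoke` for a default agent, `/{agent}/invoke` or `/{agent}/stream` for a named one) is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator


@dataclass
class ChatMessage:
    """Hypothetical stand-in for a Pydantic message model."""
    type: str  # e.g. "ai"
    content: str


# Hypothetical toy agents: each takes user text and yields reply tokens.
def echo_agent(text: str) -> Iterator[str]:
    for word in text.split():
        yield word


def shout_agent(text: str) -> Iterator[str]:
    for word in text.split():
        yield word.upper()


# Registry keyed by the agent name that appears in the URL path (assumed layout).
AGENTS: Dict[str, Callable[[str], Iterator[str]]] = {
    "echo": echo_agent,
    "shout": shout_agent,
}
DEFAULT_AGENT = "echo"


def resolve(path: str) -> Callable[[str], Iterator[str]]:
    """Map '/invoke' to the default agent and '/shout/invoke' to 'shout'."""
    parts = [p for p in path.split("/") if p]
    name = parts[0] if len(parts) > 1 else DEFAULT_AGENT
    if name not in AGENTS:
        raise KeyError(f"unknown agent: {name}")
    return AGENTS[name]


def invoke(path: str, text: str) -> ChatMessage:
    """Non-streaming: run the agent to completion and return one message."""
    agent = resolve(path)
    return ChatMessage(type="ai", content=" ".join(agent(text)))


def stream(path: str, text: str) -> Iterator[str]:
    """Streaming: forward each token as a server-sent-events 'data:' line."""
    agent = resolve(path)
    for token in agent(text):
        yield f"data: {token}\n\n"


if __name__ == "__main__":
    print(invoke("/shout/invoke", "hello world").content)
    for chunk in stream("/stream", "hi there"):
        print(chunk, end="")
```

In a real service these two functions would sit behind HTTP routes (for example a FastAPI endpoint returning a streaming response for `stream`). The sketch only shows the dispatch step and the difference between returning a complete message and yielding incremental chunks.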