agentic-memory

Basic Information

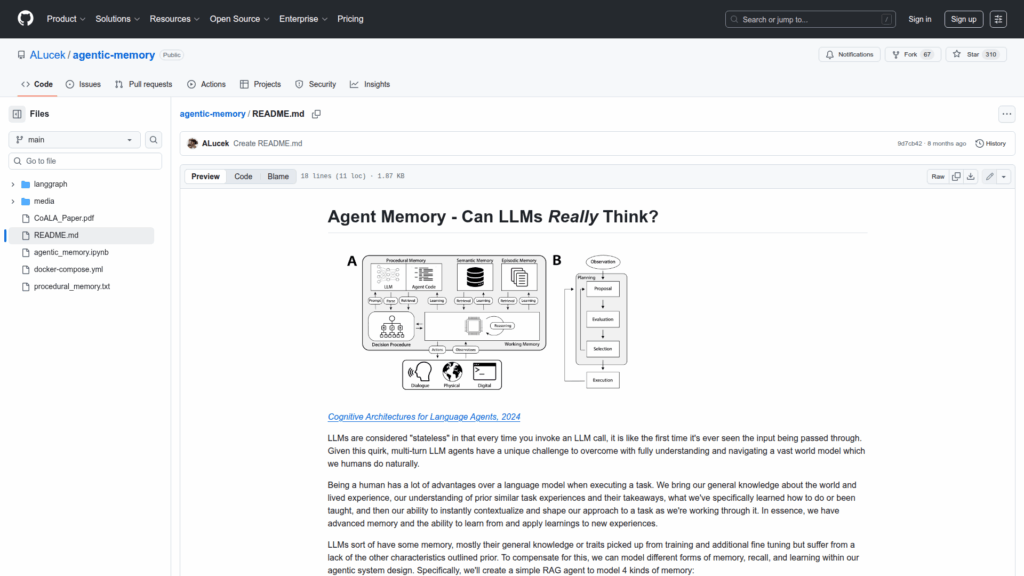

This repository explores how to model memory within language-agent architectures to work around the stateless behavior of large language models, which retain nothing between calls unless the relevant context is supplied again. It is an educational, notebook-style walkthrough that breaks agent memory into four components modeled on human cognition: Working memory (the current conversation), Episodic memory (past interactions), Semantic memory (factual knowledge), and Procedural memory (rules and skills that govern behavior). It then sketches how to incorporate these components into a retrieval-augmented generation (RAG) agent and discusses their role in multi-turn understanding, contextualization, and learning from past interactions. The material is aimed at developers and researchers who want conceptual guidance and a simple worked example of adding memory to an agent, not a full production framework. It draws on cognitive architecture principles for language agents and cites recent research in that area as background.