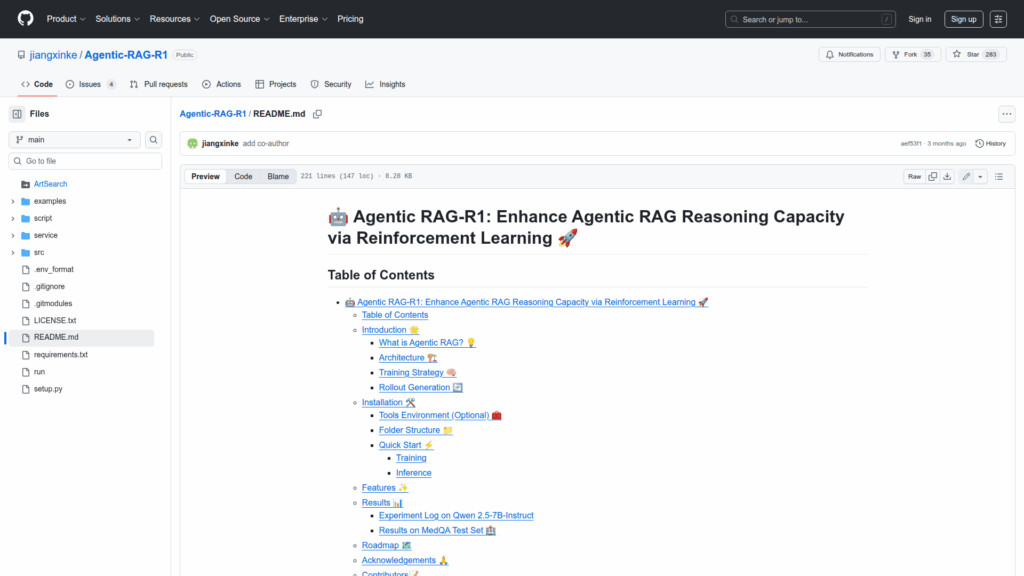

Agentic-RAG-R1

Basic Information

Agentic RAG-R1 is an open-source research and engineering repository that implements an agentic Retrieval-Augmented Generation (RAG) system together with its training pipeline. It aims to give a base language model autonomous search and multi-step reasoning abilities through reinforcement learning, specifically the GRPO (Group Relative Policy Optimization) algorithm. The project provides the architecture and code for an agent memory stack that orchestrates planning, reasoning, backtracking, summarization, tool observations, and conclusions. The repository includes training and evaluation scripts, usage examples, model checkpoints, a chat server and client, and instructions for integrating an external search tool. It is intended for developers and researchers who want to train, evaluate, and deploy agentic RAG models, or to reproduce the experiments reported in the README.
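The core idea that separates GRPO from PPO-style training is how advantages are estimated. GRPO does not learn a value function (critic). It samples a group of responses for the same prompt and scores each one relative to the rest of its group. The sketch below shows only that group-relative normalization step. It is not taken from this repository: the function name `group_relative_advantages` and the binary correctness reward are illustrative assumptions. The full GRPO objective also applies a clipped policy ratio and a KL penalty against a reference model, and neither is shown here.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize each reward against the mean and std of its own group.

    Hypothetical helper for illustration: GRPO uses the statistics of a
    group of rollouts for the same prompt as the baseline, instead of a
    learned critic.
    """
    if not rewards:
        return []
    mean = statistics.fmean(rewards)
    # Population standard deviation; some implementations use the sample std.
    std = statistics.pstdev(rewards)
    # eps avoids division by zero when every rollout gets the same reward,
    # in which case every advantage is 0 and the group contributes no signal.
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Assumed rewards for four rollouts of one question
    # (e.g. 1.0 if the final answer is correct, 0.0 otherwise).
    rewards = [1.0, 0.0, 0.0, 1.0]
    print(group_relative_advantages(rewards))
```

Rollouts that beat their group's average get a positive advantage and are reinforced, and the others are pushed down. For an agentic RAG model, one rollout would be a full trajectory of searches, reasoning steps, and a final answer.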