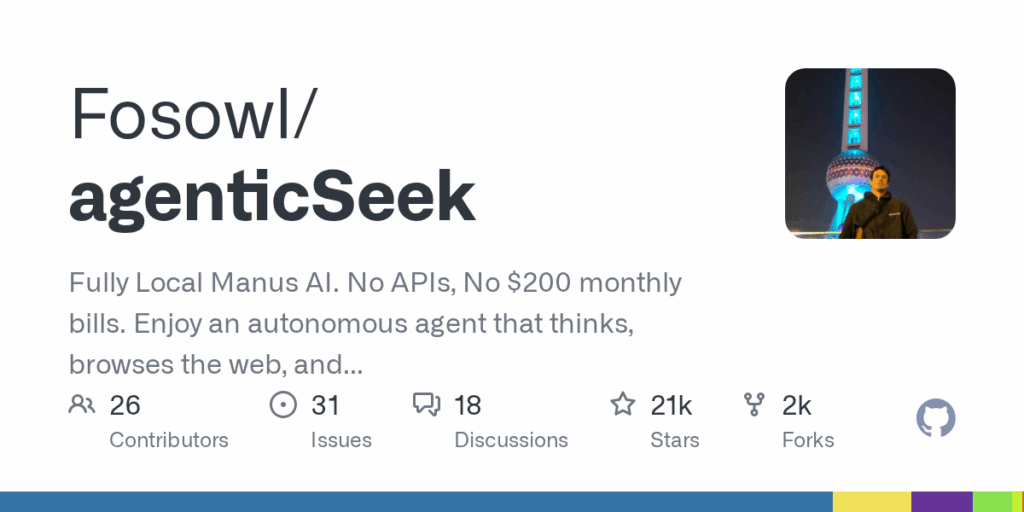

agenticSeek

Basic Information

AgenticSeek is an open-source, user-facing autonomous AI assistant designed as a 100% local alternative to Manus AI. It is built to run entirely on the user's own hardware, so models, speech, files, and browsing actions stay private and do not depend on cloud services. The project bundles a web interface and a CLI, Docker Compose services (frontend, backend, searxng, redis), and an optional self-hosted LLM server, so users can run LLMs locally through providers such as Ollama or LM Studio, or connect to external APIs if desired. The README documents prerequisites, configuration via config.ini, provider and model selection, and how to start the services. It targets tasks such as autonomous web browsing, code generation and execution, multi-step task planning, and file system interaction, while prioritizing privacy, local model usage, and modular configuration for different hardware capabilities.
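
To make the config.ini-based provider and model selection more concrete, here is a minimal sketch of how such a file could be read with Python's standard `configparser`. The section name `MAIN`, the keys `is_local`, `provider_name`, `provider_model`, and `provider_server_address`, the model name, and the helper `load_provider_settings` are all assumptions made for illustration; consult the project's README for the actual layout.

```python
import configparser

# Hypothetical config.ini contents. The section and key names below are
# assumptions for illustration, not confirmed by the text above.
SAMPLE_CONFIG = """
[MAIN]
is_local = True
provider_name = ollama
provider_model = example-model:14b
provider_server_address = 127.0.0.1:11434
"""


def load_provider_settings(text: str) -> dict:
    """Parse INI text and return the provider-related settings as a dict."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    main = parser["MAIN"]
    return {
        # getboolean accepts values such as "True", "yes", "on", "1"
        "is_local": main.getboolean("is_local"),
        "provider": main.get("provider_name"),
        "model": main.get("provider_model"),
        "address": main.get("provider_server_address"),
    }


settings = load_provider_settings(SAMPLE_CONFIG)
print(settings)
```

In a real setup the text would come from the config.ini file on disk (for example via `parser.read("config.ini")`), and switching between a local provider and an external API would amount to editing these values.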