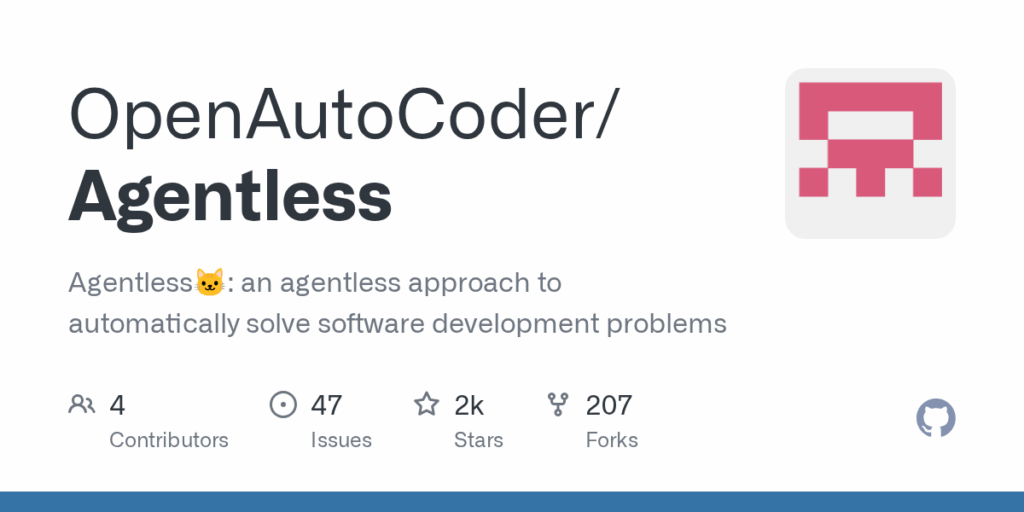

Agentless

Basic Information

Agentless is an open research and engineering project that takes an "agentless" approach to automatically solving software development problems, with a primary focus on program repair and debugging benchmarks. The repository implements a three-phase pipeline that is applied to each bug. First, hierarchical localization narrows the fault from files to functions and then to specific edit locations. Second, repair samples multiple candidate patches in diff format. Third, patch validation selects regression tests and generates reproduction tests, which are used to re-rank the candidates and choose a fix. The codebase is intended for running experiments on the SWE-bench benchmark suite and for reproducing the results reported in the associated paper. It provides scripts, dependencies, and instructions for setting up a Python environment, and it requires an OpenAI API key for certain model-driven components. Releases include the full artifacts for the SWE-bench experiments, and the README links to reproducibility details and evaluations.
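The three phases can be illustrated with a minimal Python sketch. This is not the repository's code: every name here (`Candidate`, `localize`, `sample_patches`, `select_patch`) is hypothetical, the keyword-overlap ranking is a toy stand-in for model-driven localization, and the vote-count tie-break is an assumption made for illustration only.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class Candidate:
    """One sampled patch in diff format (hypothetical container)."""
    diff: str


def localize(issue: str, files: Dict[str, str], top_k: int = 1) -> List[str]:
    """Phase 1 (toy stand-in): rank files by word overlap with the issue text.

    The real project narrows from files to functions to edit locations;
    this sketch stops at the file level.
    """
    words = set(issue.lower().split())
    ranked = sorted(
        files,
        key=lambda path: -len(words & set(files[path].lower().split())),
    )
    return ranked[:top_k]


def sample_patches(
    sampler: Callable[[str, str], str], issue: str, location: str, n: int
) -> List[Candidate]:
    """Phase 2: draw n candidate diffs from a sampler (e.g. a model call)."""
    return [Candidate(sampler(issue, location)) for _ in range(n)]


def select_patch(
    candidates: List[Candidate],
    passes_regression: Callable[[Candidate], bool],
    passes_reproduction: Callable[[Candidate], bool],
) -> Optional[Candidate]:
    """Phase 3: filter by regression tests, then re-rank the survivors.

    Ranking key (an assumption): reproduction-test result first,
    then how many surviving samples produced the same diff.
    """
    survivors = [c for c in candidates if passes_regression(c)]
    if not survivors:
        return None
    votes = Counter(c.diff for c in survivors)
    return max(survivors, key=lambda c: (passes_reproduction(c), votes[c.diff]))


if __name__ == "__main__":
    files = {"a.py": "def add(x, y): return x - y", "b.py": "print('hello')"}
    issue = "add returns wrong result for x and y"
    location = localize(issue, files)[0]

    # A canned sampler stands in for the model-driven patch generator.
    canned = iter(["-x - y\n+x + y", "-x - y\n+x * y", "-x - y\n+x + y"])
    candidates = sample_patches(lambda _issue, _loc: next(canned), issue, location, 3)

    chosen = select_patch(
        candidates,
        passes_regression=lambda c: True,
        passes_reproduction=lambda c: "+x + y" in c.diff,
    )
    print(location, chosen)
```

The sketch keeps each phase as a plain function with explicit inputs and outputs, which mirrors the fixed, non-agentic flow described above: no phase decides which step runs next.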