ai-book-writer

Basic Information

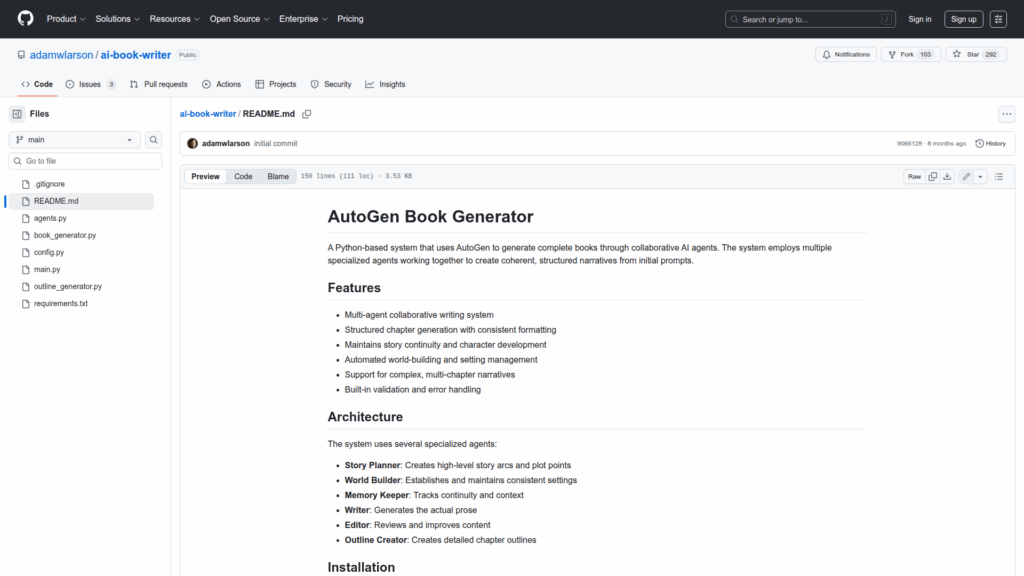

This repository is a Python-based system that uses the AutoGen framework to generate complete books by coordinating multiple specialized AI agents. It provides an example implementation and tooling to plan, outline, write, and edit multi-chapter narratives from an initial prompt. The README documents the agent roles and a suggested installation workflow using a virtual environment and a requirements file. It also gives simple usage examples that create the agents and run an outline-to-book generation sequence, lists configuration options such as the LLM endpoint URL and chapter count, and describes the output layout saved to a book_output directory. The project is intended as an experimental generator and a developer-facing reference for building collaborative agent pipelines, not as a polished end-user product.
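
The configuration options and output layout mentioned above could look roughly like the following minimal sketch. It is not the repository's actual code: `BookConfig`, `to_llm_config`, `save_chapters`, the endpoint URL, the model name, and the `chapter_NN.txt` file names are all hypothetical. The `config_list` shape is assumed from the way AutoGen-style agents are commonly configured, so check the project's own README before relying on it.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class BookConfig:
    """Hypothetical settings holder; field names and defaults are assumptions."""
    llm_base_url: str = "http://localhost:1234/v1"  # assumed local OpenAI-compatible endpoint
    num_chapters: int = 3                           # how many chapters to write
    output_dir: str = "book_output"                 # directory named in the README summary


def to_llm_config(cfg: BookConfig) -> dict:
    """Build an LLM config dict in the commonly used `config_list` shape (assumed)."""
    return {
        "config_list": [
            {
                "model": "local-model",      # placeholder model name
                "base_url": cfg.llm_base_url,
                "api_key": "not-needed",     # placeholder for local servers
            }
        ]
    }


def save_chapters(cfg: BookConfig, chapters: list[str]) -> list[Path]:
    """Write at most cfg.num_chapters chapter texts into cfg.output_dir."""
    out = Path(cfg.output_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for number, text in enumerate(chapters[: cfg.num_chapters], start=1):
        path = out / f"chapter_{number:02d}.txt"  # hypothetical naming scheme
        path.write_text(text, encoding="utf-8")
        paths.append(path)
    return paths
```

In a real run, the chapter texts would come from the agent pipeline and the dict from `to_llm_config` would be passed to whichever agents the project defines. The sketch only shows how an endpoint URL, a chapter count, and an output directory might fit together.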