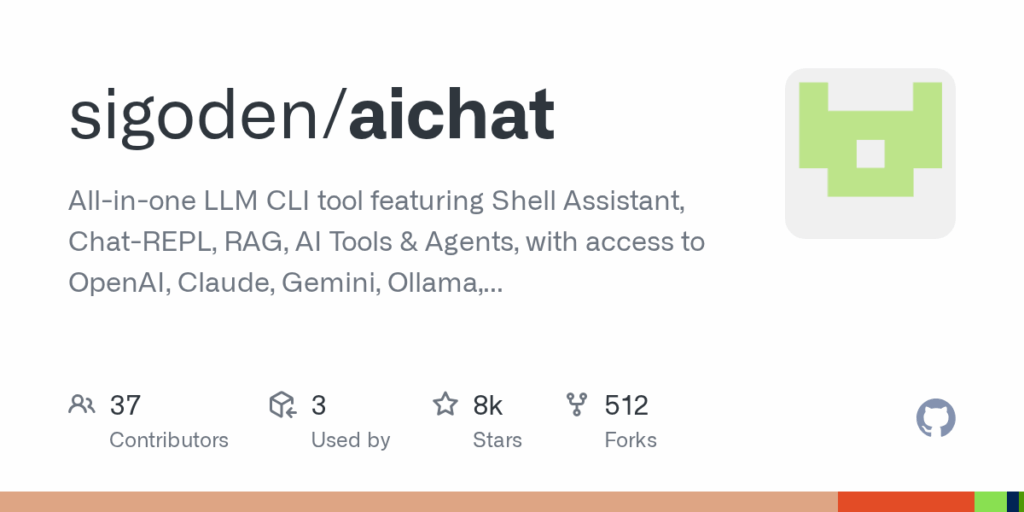

aichat

Basic Information

AIChat is an end-user command-line tool that brings large language models to the terminal, combining chat, a REPL, shell automation, retrieval-augmented generation (RAG), tools, and agent capabilities in one package. It lets users interact with many LLM providers through a unified CLI, run an interactive Chat-REPL with history and autocompletion, and convert plain-language tasks into shell commands via the Shell Assistant. It also supports sessions that maintain conversational context, macros that automate repetitive REPL sequences, and a lightweight built-in HTTP server that exposes chat and embeddings endpoints along with a web playground and a model comparison arena. The repository includes installation instructions for multiple package managers as well as prebuilt binaries, and it is licensed under MIT or Apache 2.0.
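As a rough illustration of how a client might talk to the built-in HTTP server's chat endpoint, here is a minimal Python sketch. The listen address `127.0.0.1:8000`, the OpenAI-style path `/v1/chat/completions`, the request body shape, and the model name `default` are all assumptions made for the example rather than details taken from this description, and `build_chat_request` and `send` are hypothetical helper names. Check the project's own documentation for the actual address and routes.

```python
import json
import urllib.request

# Assumption: the server listens locally on this address; adjust to your setup.
BASE_URL = "http://127.0.0.1:8000"


def build_chat_request(prompt, model="default", base_url=BASE_URL):
    """Build (but do not send) a POST request for an assumed chat endpoint."""
    payload = {
        "model": model,  # hypothetical model name, replace with a configured one
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",  # assumed OpenAI-style path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and decode the JSON reply; needs a running server."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_chat_request("Say hello in one word.")
    print(req.full_url)
    # Uncomment once a server is actually running at BASE_URL:
    # print(send(req))
```

Building the request separately from sending it keeps the sketch usable without a running server: the URL and JSON body can be inspected first, and `send` is only called once the endpoint is known to be up.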