arbigent

Basic Information

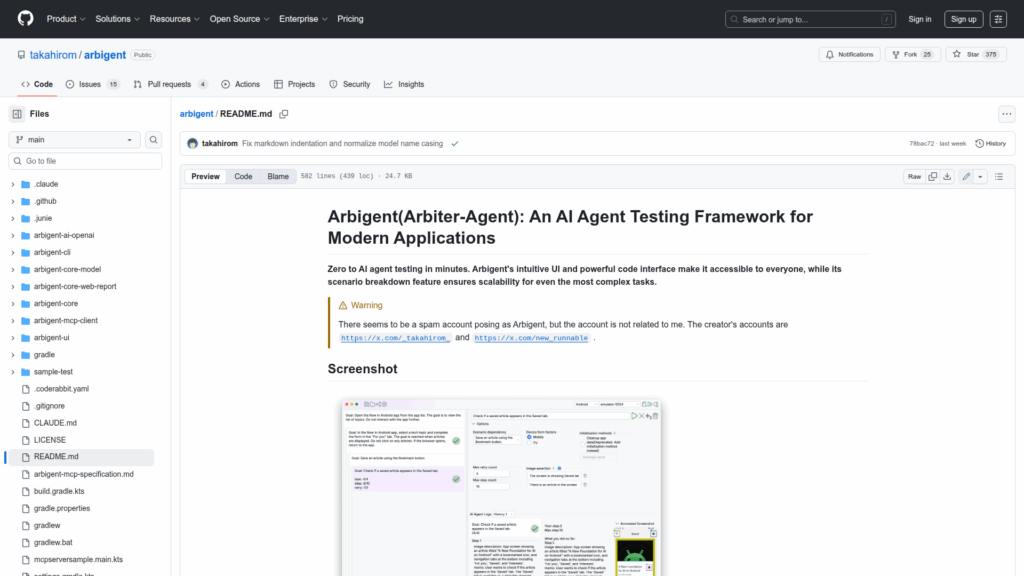

Arbigent is an open-source AI agent testing framework that makes end-to-end testing of modern applications practical by letting AI agents interact with real or emulated devices. It breaks complex goals into dependent scenarios, which can be authored in a graphical UI or saved as YAML and then executed programmatically or from a CLI.

The project targets mobile, web and TV form factors and provides interfaces for plugging in different AI providers and device drivers. It integrates with existing deterministic test flows by running Maestro YAML as initialization steps, and it can be extended with external tools through a Model Context Protocol (MCP) server configuration.

The repository includes a UI binary, a CLI installable via Homebrew, example YAML project files, and Kotlin code examples that show how to load a project file, create agent configurations and run scenarios against connected devices.
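To make the scenario model concrete, here is a small, self-contained Kotlin sketch of two ideas from the description: scenarios that depend on other scenarios, and pluggable AI providers and device drivers. Every name in it (`Scenario`, `AiProvider`, `Device`, `executionChain`, `runChain`, `GOAL_ACHIEVED`, `FakeDevice`, `RuleBasedAi`) is hypothetical and is not Arbigent's actual API; the repository's own Kotlin examples show how to load a real project file and run scenarios. The sketch assumes that a dependent scenario simply continues from whatever device state its dependency left behind, and it uses a rule-based stand-in instead of a real AI model.

```kotlin
// Conceptual sketch only: every type and function here is hypothetical and is
// NOT Arbigent's real API. It models dependent scenarios plus pluggable AI and
// device back ends.

/** One step toward a larger goal; it may require another scenario to run first. */
data class Scenario(
    val id: String,
    val goal: String,
    val dependencyId: String? = null, // hypothetical: id of the scenario that must run first
)

/** Pluggable AI back end: picks the next action from the goal and the current screen. */
interface AiProvider {
    fun decideNextAction(goal: String, screen: String): String
}

/** Pluggable device driver: reports the current screen and performs actions. */
interface Device {
    fun currentScreen(): String
    fun perform(action: String)
}

// Hypothetical sentinel an AiProvider returns once the goal is reached.
val GOAL_ACHIEVED = "goal_achieved"

/** Returns the scenarios to run for [targetId], dependencies first; fails on unknown ids or cycles. */
fun executionChain(scenarios: List<Scenario>, targetId: String): List<Scenario> {
    val byId = scenarios.associateBy { it.id }
    val seen = mutableSetOf<String>()
    val chain = mutableListOf<Scenario>()
    var currentId: String? = targetId
    while (currentId != null) {
        require(seen.add(currentId)) { "Dependency cycle at scenario '$currentId'" }
        val scenario = requireNotNull(byId[currentId]) { "Unknown scenario '$currentId'" }
        chain.add(scenario)
        currentId = scenario.dependencyId
    }
    return chain.reversed() // walked target -> root, so reverse to run the root first
}

/** Runs each scenario with a step-limited agent loop on one shared device; returns an action log. */
fun runChain(chain: List<Scenario>, ai: AiProvider, device: Device, maxSteps: Int = 10): List<String> {
    val log = mutableListOf<String>()
    for (scenario in chain) {
        var steps = 0
        var done = false
        while (!done && steps < maxSteps) {
            val action = ai.decideNextAction(scenario.goal, device.currentScreen())
            if (action == GOAL_ACHIEVED) {
                done = true
            } else {
                device.perform(action)
                log += "${scenario.id}: $action"
                steps++
            }
        }
        check(done) { "Scenario '${scenario.id}' did not reach its goal in $maxSteps steps" }
    }
    return log
}

/** Stand-in device: a tiny screen state machine instead of a real emulator. */
class FakeDevice : Device {
    private var screen = "home"
    private val transitions = mapOf(
        ("home" to "open_settings") to "settings",
        ("settings" to "open_about") to "about",
    )
    override fun currentScreen(): String = screen
    override fun perform(action: String) {
        screen = transitions[screen to action] ?: screen
    }
}

/** Stand-in AI: fixed rules mapping each goal to (target screen, action to take). */
class RuleBasedAi : AiProvider {
    private val rules = mapOf(
        "Open Settings" to ("settings" to "open_settings"),
        "Open About page" to ("about" to "open_about"),
    )
    override fun decideNextAction(goal: String, screen: String): String {
        val (targetScreen, action) = rules.getValue(goal)
        return if (screen == targetScreen) GOAL_ACHIEVED else action
    }
}

fun main() {
    val scenarios = listOf(
        Scenario(id = "open-settings", goal = "Open Settings"),
        Scenario(id = "open-about", goal = "Open About page", dependencyId = "open-settings"),
    )
    val chain = executionChain(scenarios, "open-about")
    runChain(chain, RuleBasedAi(), FakeDevice()).forEach(::println)
}
```

Running `main` prints `open-settings: open_settings` followed by `open-about: open_about`: the dependency runs first, and the dependent scenario picks up from the Settings screen it left behind. Keeping the AI and the device behind interfaces is what lets the same loop run against a different model or a real device driver without changing the runner.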